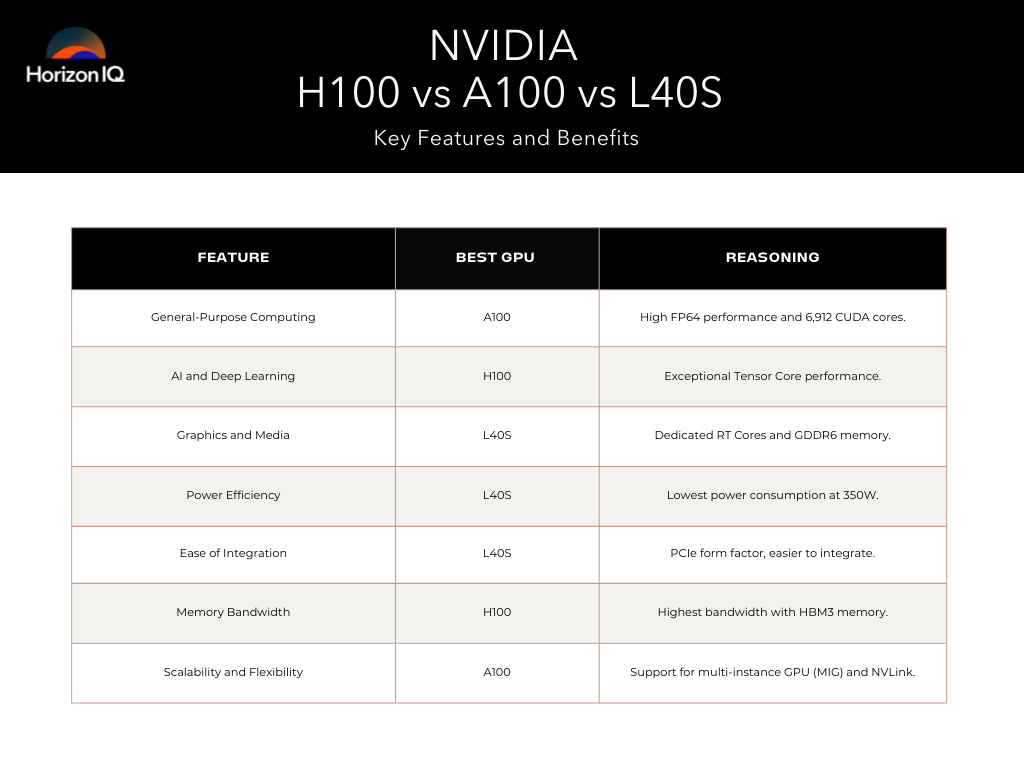

What’s the difference between the NVIDIA H100, A100, and L40S? At HorizonIQ, we understand the confusion that can arise when choosing the right GPU. The high-performance computing and AI training landscape is rapidly evolving, driven by continuous advancements in GPU technology.

Each GPU offers unique features and capabilities tailored to different workloads, so it’s best to carefully assess your computing requirements before implementation. Let’s dive into the specifics of these GPUs, comparing them across various parameters to help you decide which one suits your needs and use cases.

NVIDIA H100 vs A100 vs L40S GPU Specs

| Feature | A100 80GB SXM | H100 80GB SXM | L40S |
| --- | --- | --- | --- |
| GPU Architecture | Ampere | Hopper | Ada Lovelace |
| GPU Memory | 80GB HBM2e | 80GB HBM3 | 48GB GDDR6 |
| Memory Bandwidth | 2039 GB/s | 3352 GB/s | 864 GB/s |
| L2 Cache | 40MB | 50MB | 96MB |
| FP64 Performance | 9.7 TFLOPS | 33.5 TFLOPS | N/A |
| FP32 Performance | 19.5 TFLOPS | 66.9 TFLOPS | 91.6 TFLOPS |
| RT Cores | N/A | N/A | 142 (212 TFLOPS RT performance) |
| TF32 Tensor Core (with sparsity) | 312 TFLOPS | 989 TFLOPS | 366 TFLOPS |
| FP16/BF16 Tensor Core (with sparsity) | 624 TFLOPS | 1979 TFLOPS | 733 TFLOPS |
| FP8 Tensor Core (with sparsity) | N/A | 3958 TFLOPS | 1466 TFLOPS |
| INT8 Tensor Core (with sparsity) | 1248 TOPS | 3958 TOPS | 1466 TOPS |
| Media Engine | 0 NVENC, 5 NVDEC, 5 NVJPEG | 0 NVENC, 7 NVDEC, 7 NVJPEG | 3 NVENC, 3 NVDEC, 4 NVJPEG |
| Power Consumption | Up to 400W | Up to 700W | Up to 350W |
| Form Factor | SXM4 | SXM5 | Dual Slot PCIe |
| Interconnect | NVLink 600 GB/s, PCIe 4.0 x16 | NVLink 900 GB/s, PCIe 5.0 x16 | PCIe 4.0 x16 |
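
To put the table in relative terms, the short Python sketch below divides each card’s peak figures by the A100’s. The numbers are copied from the table above; the dictionary keys and the `relative_to` helper are illustrative names, and vendor peak figures are upper bounds rather than measured results.

```python
# Peak figures copied from the spec table above (vendor peaks, not benchmarks).
SPECS = {
    "A100": {"mem_bw_gbs": 2039, "fp32_tflops": 19.5, "fp16_tensor_tflops": 624},
    "H100": {"mem_bw_gbs": 3352, "fp32_tflops": 66.9, "fp16_tensor_tflops": 1979},
    "L40S": {"mem_bw_gbs": 864, "fp32_tflops": 91.6, "fp16_tensor_tflops": 733},
}


def relative_to(baseline: str, specs: dict = SPECS) -> dict:
    """Return every GPU's figures divided by the baseline GPU's figures."""
    base = specs[baseline]
    return {
        gpu: {key: round(value / base[key], 2) for key, value in row.items()}
        for gpu, row in specs.items()
    }


if __name__ == "__main__":
    for gpu, ratios in relative_to("A100").items():
        print(gpu, ratios)
```

On these peak figures, the H100 offers roughly 3.2x the A100’s FP16 Tensor Core throughput and about 1.6x its memory bandwidth, while the L40S leads on FP32 but has less than half the A100’s memory bandwidth.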

How do these GPUs perform in general-purpose computing?

A100: Known for its versatility, the A100 is built on the Ampere architecture and excels in both training and inference tasks. It offers robust FP64 performance, making it well-suited for scientific computing and simulations.

H100: Leveraging the Hopper architecture, the H100 significantly enhances performance, particularly for AI and deep learning applications. Its FP64 and FP8 performance are notably superior, making it ideal for next-gen AI workloads.

L40S: Based on the Ada Lovelace architecture, the L40S is optimized for a blend of AI, graphics, and media workloads. It is not designed for FP64 workloads but compensates with outstanding FP32 and mixed-precision performance, along with cost efficiency.

Pro tip: The A100’s flexibility and versatile performance make it a great choice for general-purpose computing. With solid FP64 capabilities, it handles training, inference, and scientific simulations well.

Which GPU is better for AI and deep learning?

H100: Offers the highest Tensor Core performance among the three, making it the best choice for demanding AI training and inference tasks. Its advanced Transformer Engine and FP8 capabilities set a new benchmark in AI computing.

A100: Still a strong contender for AI workloads, especially where precision and memory bandwidth are critical. It is well-suited for established AI infrastructures.

L40S: Provides balanced performance with excellent FP32 and Tensor Core capabilities, suitable for versatile AI tasks. It is an attractive option for enterprises looking to upgrade from older GPUs more cost-efficiently than with the H100 or A100.

Pro tip: For demanding AI training tasks, the H100 with its superior Tensor Core performance and advanced capabilities is a clear winner.
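
Tensor Core throughput is only part of the picture, because a model also has to fit in GPU memory. As a rough sketch, weight memory is the parameter count multiplied by the bytes per parameter at the chosen precision. The helper names, the 30-billion-parameter example, and the 20% headroom reserved for activations and runtime overhead are assumptions for illustration, not figures from NVIDIA or HorizonIQ.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1, "int8": 1}

# Memory capacities from the spec table, treated as decimal GB (1e9 bytes).
GPU_MEMORY_GB = {"A100": 80, "H100": 80, "L40S": 48}


def weights_gb(num_params: float, precision: str) -> float:
    """Approximate memory for the model weights alone, in GB."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits(num_params: float, precision: str, gpu: str, headroom: float = 0.2) -> bool:
    """True if the weights fit on one GPU while keeping `headroom` free.

    The headroom fraction is an assumed allowance for activations,
    KV cache, and framework overhead; tune it for a real workload.
    """
    usable_gb = GPU_MEMORY_GB[gpu] * (1 - headroom)
    return weights_gb(num_params, precision) <= usable_gb


if __name__ == "__main__":
    params = 30e9  # hypothetical 30-billion-parameter model
    for gpu in GPU_MEMORY_GB:
        print(gpu, "fp16:", fits(params, "fp16", gpu), "fp8:", fits(params, "fp8", gpu))
```

Under these assumptions, a 30-billion-parameter model needs about 60 GB for FP16 weights, which fits an 80GB A100 or H100 but not a 48GB L40S. At FP8 the weights shrink to about 30 GB and fit on the L40S. Note that the capacity check alone does not account for the A100’s lack of an FP8 Tensor Core mode.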

How do these GPUs compare in graphics and media workloads?

L40S: Equipped with RT Cores and ample GDDR6 memory, the L40S excels in graphics rendering and media processing, making it ideal for applications like 3D modeling and video rendering.

A100 and H100: Primarily designed for compute tasks, they lack dedicated RT Cores and video output, limiting their effectiveness in high-end graphics and media workloads.

Pro tip: The L40S is best for graphics and media workloads. Due to its RT Cores and ample GDDR6 memory, it’s ideal for tasks like 3D modeling and video rendering.

What are the power and form factor considerations?

A100: Consumes up to 400W and uses the SXM4 form factor, which requires an SXM4 baseboard such as NVIDIA HGX A100 but is already deployed in many existing server platforms.

H100: The most power-hungry, requiring up to 700W, and uses the newer SXM5 form factor, necessitating compatible hardware.

L40S: The lowest power draw of the three at up to 350W. Its dual-slot PCIe form factor makes it easier to integrate into a wide range of systems.

Pro tip: If cooling is a concern, the L40S draws the least power per card (up to 350W), so it produces the least heat to dissipate.
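
For capacity planning, the power figures can be turned into two quick numbers: peak throughput per watt and how many cards fit within a given GPU power budget. The sketch below uses the maximum power and FP16/BF16 Tensor Core figures from the spec table. The function names and the 2,800W budget are hypothetical, and real draw depends on the workload and on the rest of the server.

```python
# Maximum board power and peak FP16/BF16 Tensor Core throughput from the spec table.
GPUS = {
    "A100": {"max_power_w": 400, "fp16_tensor_tflops": 624},
    "H100": {"max_power_w": 700, "fp16_tensor_tflops": 1979},
    "L40S": {"max_power_w": 350, "fp16_tensor_tflops": 733},
}


def tflops_per_watt(gpu: str) -> float:
    """Peak FP16 Tensor Core TFLOPS divided by maximum board power."""
    spec = GPUS[gpu]
    return spec["fp16_tensor_tflops"] / spec["max_power_w"]


def gpus_within_budget(gpu: str, budget_w: int) -> int:
    """How many cards fit in a power budget, counting GPU power only."""
    return budget_w // GPUS[gpu]["max_power_w"]


if __name__ == "__main__":
    budget_w = 2800  # hypothetical GPU power budget for one server
    for name in GPUS:
        print(name, round(tflops_per_watt(name), 2), gpus_within_budget(name, budget_w))
```

Peak throughput per watt and absolute power draw can rank the cards differently, so it is worth computing both against your own power and cooling limits.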

What are the benefits of each GPU?

A100 Benefits

- Versatility across a range of workloads.

- Robust memory bandwidth and FP64 performance.

- Established and widely supported in current infrastructures.

H100 Benefits

- Superior AI and deep learning performance.

- Advanced Hopper architecture with high Tensor Core throughput.

- Excellent for cutting-edge AI research and applications.

L40S Benefits

- Balanced performance for AI, graphics, and media tasks.

- Lower power consumption and easier integration.

- Cost-effective upgrade path for general-purpose computing.

What are the pros and cons of each NVIDIA GPU?

H100

- Pros: Superior performance for large-scale AI, with HBM3 memory and the highest Tensor Core throughput of the three.

- Cons: High cost, limited availability.

A100

- Pros: Versatile for both training and inference, balanced performance across workloads.

- Cons: Still relatively expensive, moderate availability.

L40S

- Pros: Versatile for AI and graphics, cost-effective for AI inferencing, and good availability.

- Cons: Lower Tensor Core throughput than the H100, and less memory capacity and bandwidth than both the H100 and A100, making it less suited for large-scale model training.

Real-world use cases: NVIDIA H100 vs A100 vs L40S GPUs

The NVIDIA H100, A100, and L40S GPUs have found significant applications across various industries.

The H100 excels in cutting-edge AI research and large-scale language models, the A100 is a favored choice in cloud computing and HPC, and the L40S is making strides in graphics-intensive applications and real-time data processing.

These GPUs are not only enhancing productivity but also driving innovation across different industries. Here are a few examples of how NVIDIA GPUs are making an impact:

NVIDIA H100 in Action

Inflection AI: Inflection AI, backed by Microsoft and NVIDIA, plans to build a supercomputer cluster using 22,000 NVIDIA H100 compute GPUs, potentially rivaling the performance of the Frontier supercomputer. This cluster marks a strategic investment in scaling speed and capability for Inflection AI’s products, notably its AI chatbot Pi.

Meta: To support its open-source artificial general intelligence (AGI) initiatives, Meta plans to purchase 350,000 NVIDIA H100 GPUs by the end of 2024. Meta’s significant investment is driven by its ambition to enhance its infrastructure for advanced AI capabilities and wearable AR technologies.

NVIDIA A100’s Broad Impact

Microsoft Azure: Microsoft Azure integrates the A100 GPUs into its services to facilitate high-performance computing and AI scalability in the public cloud. This integration supports various applications, from natural language processing to complex data analytics.

NVIDIA’s Selene Supercomputer: Selene, an NVIDIA DGX SuperPOD system, uses A100 GPUs and has been instrumental in AI research and high-performance computing (HPC). It has set records for AI model training times and has ranked as high as No. 5 on the TOP500 list, making it one of the fastest industrial supercomputers.

Emerging Use Cases for NVIDIA L40S

Animation Studios: The NVIDIA L40S is being widely adopted in animation studios for 3D rendering and complex visual effects. Its advanced capabilities in handling high-resolution graphics and extensive data make it ideal for media and gaming companies creating detailed animations and visual content.

Healthcare and Life Sciences: Healthcare organizations are leveraging the L40S for genomic analysis and medical imaging. The GPU’s efficiency in processing large volumes of data is accelerating research in genetics and improving diagnostic accuracy through enhanced imaging techniques.

Which GPU is right for you?

The choice between the NVIDIA H100, A100, and L40S depends on your workload requirements and budget.

- Choose the H100 if you need the highest possible performance for large-scale AI training and scientific simulations, and budget is not a constraint.

- Choose the A100 if you require a versatile GPU that performs well across a range of AI training and inference tasks and want a balance between performance and cost.

- Choose the L40S if you need a cost-effective solution for balancing AI inferencing, data center graphics, and real-time applications.
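
The three recommendations above can be written down as a small lookup, which is handy if you want to tag workloads in a planning script. This is only one possible encoding: the workload labels and the `recommend_gpu` function are hypothetical, and falling back to the A100 when the H100 is out of budget is an assumption rather than a rule from the list.

```python
def recommend_gpu(primary_use: str, budget_constrained: bool = True) -> str:
    """Map a coarse workload label to one of the three GPUs discussed here."""
    heavy_compute = {"large_scale_training", "scientific_simulation"}
    balanced = {"mixed_training_inference"}
    cost_sensitive = {"inference", "graphics", "real_time"}

    if primary_use in heavy_compute:
        # Assumption: with a tight budget, fall back to the A100.
        return "A100" if budget_constrained else "H100"
    if primary_use in balanced:
        return "A100"
    if primary_use in cost_sensitive:
        return "L40S"
    raise ValueError(f"Unknown workload label: {primary_use!r}")


if __name__ == "__main__":
    print(recommend_gpu("large_scale_training", budget_constrained=False))
    print(recommend_gpu("mixed_training_inference"))
    print(recommend_gpu("graphics"))
```

A real selection would also weigh memory capacity, interconnect, and availability, so treat the output as a starting point for discussion.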

The HorizonIQ Difference

At HorizonIQ, we’re excited to bring NVIDIA’s technology to our bare metal servers. Our goal is to help you find the right fit at some of the most competitive prices on the market.

Whether expanding AI capabilities or running graphic-intensive workloads, our flexible and reliable single-tenant infrastructure offers the performance you need to accelerate your next-gen technologies.

Plus, with zero lock-in, seamless integration, 24/7 support, and fully customizable solutions, we’ll get you the infrastructure you need, sooner.

Contact us today if you’re ready to see the performance NVIDIA GPUs can bring to your organization.