A Guide to Evaluating Cloud Vendor Lock-In with Site Reliability Engineering

Adoption of cloud services from the Big 3—AWS, Azure and Google—has outpaced new platforms and smaller niche vendors, prompting evolving discussion around the threat of “lock-in.” Thorough evaluation using common tenets can help determine the risk of adopting a new cloud service.

Vendor lock-in is a service delivery method that makes a customer reliant on the vendor and limited third-party vendor partners. A critical aspect of vendor lock-in revolves around the risk a cloud service will inhibit the velocity of teams building upon cloud platforms. Vendor lock-in can immobilize a team, and changing vendors comes with substantial switching costs. That’s why even when a customer does commit to a vendor, they’re increasingly demanding the spend portability to switch infrastructure solutions within that vendor’s portfolio.

There are common traits and tradeoffs to cloud vendor lock-ins, but how the services are consumed by an organization or team dictates how risky a lock-in may be. Just like evaluating the risks and rewards of any critical infrastructure choice, the evaluation should be structured around the team’s principles to make it more likely that the selection and adoption of a cloud service will be successful.

The Ethos of Site Reliability Engineering

Site Reliability Engineering (SRE), as both an ethos and job role, has gained a following across startups and enterprises. While teams don’t have to strictly adhere to it as a framework, the lessons and approaches documented in Google’s Site Reliability Engineering book provide an incredible common language for the ownership and operation of production services in modern cloud infrastructure platforms.

The SRE ethos can provide significant guidance for the adoption of new platforms and infrastructure, particularly in its description of the Product Readiness Review (PRR). While the PRR as described in the Google SRE Book focuses on the onboarding of internally developed services for adoption of support by the SRE role, its steps and lessons can equally apply to an externally provided service.

The SRE ethos also provides a shared language for understanding the principles of configuration and maintenance as code, or at least automation. If “Infrastructure as Code” is an operational tenant of running production systems on Cloud platforms, then a code “commit” can be thought of as a logical unit of change required to enact an operational change to a production or planned service. The number of commits required to enact adoption of a service can be considered a measurement of the amount of actions required to adopt a given cloud service, functionally representing a measurement of lock-in required for adoption of the cloud service.

Combining the principles or SRE and its PRR model with the measurement of commits is a relatively simple and transparent way to evaluate the degrees of lock-in adoption the organization and team will be exposed to during adoption of a cloud service.

Eliminating toil, a key tenet of SRE, should also be considered. Toil is a necessary but undesirable artifact of managing and supporting production infrastructure and has real cost to deployment and ongoing operations. Any evaluation or readiness review of a service must stick to the tenants of the SRE framework and acknowledge toil. If not, the reality of supporting that service will not be realized and may prevent a successful adoption.

Putting Site Reliability Engineering and Product Readiness Review to Work

Let’s create a hypothetical organization composed of engineers looking to release a new SaaS product feature as a set of newly developed services. The team is part of an engineering organization that has a relatively modern and capable toolset:

- Systems infrastructure is provisioned and managed by Change Management backed by version control

- Application Services are developed and deployed as containers

- CI/CD is trusted and used by the organization to manage code release lifecycles

- Everything and anything can be instrumented for monitoring and on-call alarms are sane

- All underlying infrastructure is “reliable,” and all data sets are “protected” according to their requirements

The team can deploy the new application feature as a set of services through its traditional toolset. But this release represents a unique opportunity to evaluate new infrastructure platforms that may potentially leverage new features or paradigms of that platform. For example, a hosted container service might simplify horizontal scaling or improve self-healing behaviors over the existing toolsets and infrastructure, and because the new services are greenfield, they can be developed to best fit the platform of choice.

However, the adoption of a new platform exposes the organization to substantial risk, both from the inevitable growing pains in adopting any new service, and from exposing the organization to unforeseen and unacceptable amounts of vendor lock-in given its tradeoffs. This necessitates a risk discovery process as part of the other operational concerns of the PRR.

Risk Discovery During a PRR

Using the SRE PRR model and code commits as a measurement of change, we should have everything we need to evaluate the viability of a service along with its exposure to lock-in. The PRR model can be used to discover and map specifics of the service to the SRE practices and principles.

Let’s again consider the hypothetical hosted container service that has unique and advantageous auto-scaling qualities. While it may simplify the operational burden of scaling, the new service presents a substantial new barrier to application delivery lifecycles.

Each of the following items represent commits discovered during a PRR of the container service:

- The in-place change management toolset may not have the library or features to provision and manage the new container service, and several commits may be required to augment change management to reflect that.

- More than just eliminating toil, those change management commits are required in order to include the new container service into a responsible part of the CI/CD pipeline.

- The new container service likely has new constraints for network security or API authorization and RBAC, which needs to be accounted for in order to minimize security risk to the organization. Representing these as infrastructure as code can require a non-trivial effort represented by more commits associated with the service.

- The new container service may have network boundaries that present a barrier to log aggregation or access to other internal services required as a dependency for the new feature. Circumventing the boundaries or mirroring dependencies will likely require commits to implement and can be critical to the tenet of minimizing toil.

- Persisting and accessing data in a reliable and performant way could be significantly more effort than any other task in the evaluation. The SRE book has a chapter on Data Integrity that is uniquely suited to cloud services, highlighting the amount of paradigms and effort required to make data available reliably in cloud services. Adoption of those paradigms and associated effort would have to be represented as commits to the overall adoption, continuing the exposure of risk.

- Monitoring and instrumenting, though often overlooked, are anchor tenants of Site Reliability Engineering. Monitoring of distributed systems comes with a steep learning curve and can present a significant risk to the rest of the organization if not appropriately architected and committed to the monitoring toolset.

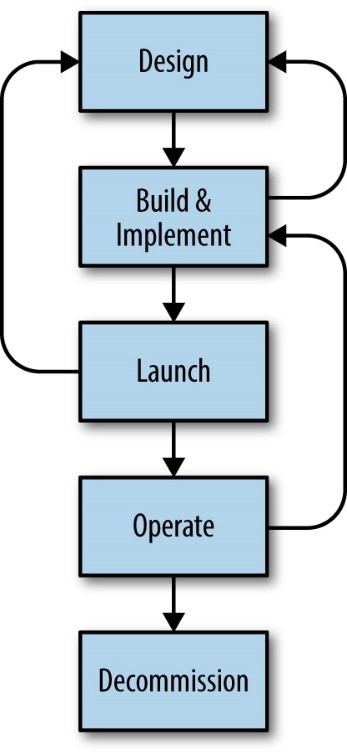

- Finally, how will the new service and infrastructure be decommissioned? No production service lives forever, and eventually a feature service will either be re-engineered or potentially removed. Not only does the team need to understand how to decommission the platform, but they must also have the tooling and procedures to remove sensitive data, de-couple dependencies or make other efforts that are likely to require commits to enact.

Takeaways from a PRR Using the SRE Framework

While certainly not a complete list of things to discover during a PRR, the list above highlights key areas where changes must be made to the organization’s existing toolset and infrastructure in order to accommodate the adoption of a new container hosting service. The engineering time, as measured by commits, can evaluate the amount of work required. Since the work is specific to the container service, it functionally represents measurable vendor lock-in.

Another hosting service with less elegant features may have APIs or tooling that are less effort for adoption, minimizing lock-in. Or the amount of potential lock-in is so significant that other solutions, such as a self-hosted container service, represent more value for less effort and lock-in risk than the adoption of an outside service. The evaluation can only be made by the team responsible for the decision. The right mode of evaluation, however, can make that decision a whole lot easier.