Month: February 2020

IT Pros Predict What Infrastructure and Data Centers Will Look Like by 2025

Predicting the future of tech is astonishingly hard. Human foresight is derailed by a host of cognitive biases that lead us to overreact to an exciting development or completely miss what in hindsight seems obvious (like the tech titans who scoffed at the iPhone’s introduction).

We still love to try our hands at prognosticating though—especially on trends and issues that hit close to home.

That’s why INAP surveyed 500 IT leaders and infrastructure managers about the near-term future of their profession and the industry landscape. Participants were asked to agree or disagree with the likelihood of eight predictions becoming reality by 2025.

The representative survey was conducted in the U.S. and Canada among businesses with more than 100 employees and has a margin of error of +/- 5 percent.
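For a sense of where a figure like +/- 5 percent comes from, here is a minimal sketch of the standard margin-of-error formula for a survey proportion. The survey does not state its confidence level or sampling method, so this assumes simple random sampling, a 95 percent confidence level (z = 1.96) and the most conservative proportion, p = 0.5.

```python
import math

def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Margin of error for a proportion under simple random sampling.

    Assumptions (not stated in the survey): p = 0.5 is the worst case,
    and z = 1.96 corresponds to a 95% confidence level.
    """
    return z * math.sqrt(p * (1 - p) / n)

moe = margin_of_error(500)
print(f"±{moe * 100:.1f} percentage points")  # → ±4.4 percentage points
```

Under these assumptions, 500 respondents yield roughly +/- 4.4 points, which sits within the rounded +/- 5 percent reported.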

Check out the results below, as well as some color commentary from INAP’s data center, cloud and network experts.

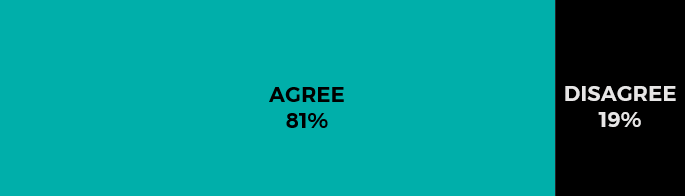

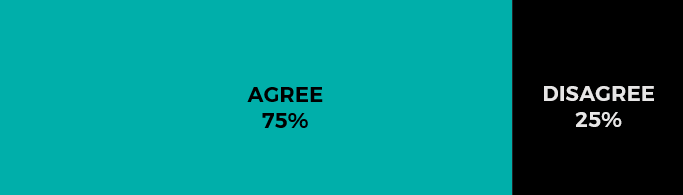

By 2025, due to the advancements of AI and machine learning, most common data center and network tasks will be completely automated.

The case for agree.

“For the most basic of tasks, technology advancements like AI, Machine Learning, workflow management and others are quickly rising to a place of ‘hands off’ for those currently managing these tasks,” said TJ Waldorf, CMO and Head of Inside Sales and Customer Success at INAP. “In five years, we’ll see the pace of these advancements increase and the value seen by IT leaders also increase.”

The case for disagree.

It’s difficult to say how many data center and network advancements will truly be driven by artificial intelligence and machine learning as opposed to already-proven software-defined automation. The market for technologies like AIOps (Artificial Intelligence for IT Operations) is still nascent, despite rising interest. Regardless of their source, automation developments will benefit infrastructure and operations professionals, according to Waldorf.

“The reality is that these developments will give them time back to spend on business-driving, revenue-accelerating tasks,” he said. “The more we safely automate, the less risk there is of human-caused errors, which top the list of problems in the data center.”

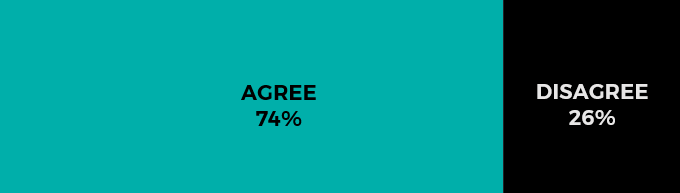

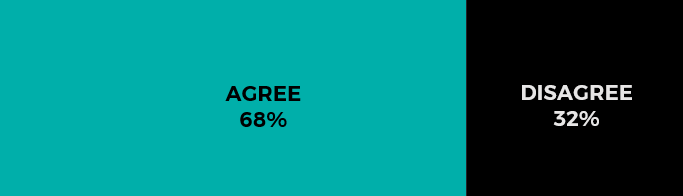

By 2025, on-premise data centers will be virtually non-existent.

The case for agree.

Workloads are leaving on-prem data centers for cloud and colocation at an incredible rate. In a study published late last year, we found that infrastructure managers anticipate a 38 percent reduction in on-premise workloads by 2022, driven by a need for greater network performance, application scalability and data center resiliency.

The case for disagree.

Interestingly, 48 percent of non-senior infrastructure managers surveyed disagree with the prediction. INAP’s Josh Williams, Vice President of Channel and Solutions Engineering, thinks this group will likely prove right, despite the current migration trends.

“Virtually non-existent is a bit of an overstatement,” said Williams. “The majority of workloads are still on prem today and it’s unlikely ‘virtually’ all of them will make it out for a variety of reasons. However, the trend is unmistakable: IT practitioners are abandoning data center management in huge numbers to help their applications perform and scale and allow them to focus on more than just keeping the lights on.”

By 2025, most applications will be deployed using “serverless” models.

The case for agree.

The introduction of AWS Lambda in 2014 made waves for its promise of deploying apps without any consideration for resource provisioning or server management. Adoption of serverless is growing, and as Microsoft and Google continue to develop their Amazon alternatives, we can expect even more organizations to test it out.
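To make "no server management" concrete: with AWS Lambda’s Python runtime, the deployable unit is a handler function that the platform invokes with an `event` and a `context` object. The sketch below assumes an API Gateway proxy-style event; the `name` query parameter and greeting are hypothetical.

```python
import json

# Minimal AWS Lambda-style handler for the Python runtime. The platform calls
# this function once per event; provisioning and scaling happen outside the code.
# Assumption: the event follows the API Gateway proxy shape, and "name" is a
# hypothetical query parameter used only for illustration.
def lambda_handler(event, context):
    params = (event or {}).get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}"}),
    }

if __name__ == "__main__":
    # Local smoke test with a fake event; the context object is unused here.
    print(lambda_handler({"queryStringParameters": {"name": "INAP"}}, None))
```

Everything outside the function (instances, capacity, patching) is the provider’s concern, which is both the appeal and, per Curry’s point below, the source of the visibility trade-off.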

The case for disagree.

Notably, 43 percent of non-senior infrastructure managers disagree with this statement. INAP’s Jennifer Curry, Senior Vice President of Global Cloud Services, agrees with them:

“Serverless models have compelling use cases for ‘born in the cloud’ apps that have sporadic resource usage,” she said. “The tech, however, has a very long way to go before it’s the environment best suited for most workloads. The economics and performance calculus will favor other IaaS models for the foreseeable future, specifically for steady-state workloads and applications that require visibility for security and compliance.”

Curry also notes that serverless is still a new and somewhat nebulous term that’s often misused as a synonym for any cloud or IaaS service, which could be skewing the optimism. Most public cloud usage still involves compute and storage services that require time-intensive, hands-on monitoring and resource management.

By 2025, virtually all companies will have a multicloud presence.

The case for agree.

“We’re already in a multicloud world,” said Curry, noting surveys that suggest widespread adoption at the enterprise level. “The more interesting question to me is: How many enterprises have a coherent multicloud strategy? Deploying in multiple environments is easy. Adopting a management and monitoring apparatus that mitigates vulnerabilities, ensures peak performance, and optimizes costs across infrastructure platforms is a challenge many enterprises struggle with.”

The case for disagree.

Outside of small businesses (which were not polled in this survey), INAP experts didn’t see much of a case for ‘disagree’ here. A certain percentage of businesses may attempt to achieve efficiencies by going all in on a single platform, but issues with lock-in and performance will likely deter that. Add SaaS platforms to your definition of multicloud, which our experts believe you should, and it’s hard to see anything but a multicloud world by 2025.

By 2025, due to increasing demands for hyper-low latency service, most enterprises will adopt “edge” networking strategies.

The case for agree.

“Depending on your definition of edge networking, this prediction is already on its way to being true,” said Williams. “An edge networking strategy is about reaching customers and end-users as quickly as possible. Whether it is achieved through geographically distributed cloud, CDN or network route optimizations, cutting latency will be a pre-requisite for the success of any mission-critical application.”

The case for disagree.

Waldorf echoes the notion that most companies will pursue latency-reduction in the coming years but suggests that just like the introduction of cloud in the mid-2000s, a full embrace of “edge” as an established concept may take longer.

“Edge use cases are still evolving,” he said. “The idea has been around a lot longer, but in the context of today’s IT landscape it’s only recently become something more leaders are starting to research and think about why it matters to them.”

By 2025, Chief Security Officers or Chief Information Security Officers will be considered the second most important role at most enterprises.

The case for agree.

Cybersecurity is among the most pressing challenges faced day in, day out, according to IT pros, and this is unlikely to change as attacks grow more intense and unpredictable. CSOs and CISOs are key to staying one step ahead of vulnerabilities and require the authority to make necessary investments.

The case for disagree.

“It makes sense IT pros would largely agree with this proposition, as security leaders, along with CIOs, will be responsible for managing extreme amounts of risk critical to revenue,” said Williams. “The issue with this prediction, however, is that the CSO’s role is typically only widely visible when things go very wrong. So it’s unlikely stakeholders internally or externally will view them second to the CEO, whether or not the distinction is deserved.”

By 2025, IT and product development teams at most companies will be fully integrated.

The case for agree.

In a 2018 survey, nearly 90 percent of IT infrastructure managers said they want to take a leading role in their company’s digital transformation initiatives. And that makes perfect sense. The success of any digital product or service ultimately depends just as much on its infrastructure performance as its coding, design and marketing. Integrating infrastructure operations with product teams could accelerate that goal.

The case for disagree.

Curry thinks integration may be the wrong goal, and that IT can grow its influence within organizations and lead digital transformation through stronger partnerships.

“IT teams will have more success focusing on alignment with product teams, as opposed to pursuing complex reorganizations,” said Curry. “Senior IT leadership will still need to make a strong case as to why they need to be at the table earlier rather than later in the product development lifecycle. We’re seeing many of our most successful customers achieve alignment, but it’s a process that can take time and patience.”

By 2025, despite technological development, the IT function will essentially look the same as it did in 2020.

The case for agree.

New tools and platforms can be implemented without changing the overall function of IT: infrastructure deployment and application delivery, preventing downtime, supporting end users and so on.

The case for disagree.

With the decline of on-premise data centers and the rise of multicloud and hybrid platforms, the function of IT will inevitably evolve. As IT pros spend less time on routine infrastructure upkeep and maintenance, more time can be allocated to projects that drive innovation and efficiency. In INAP’s recent State of IT Infrastructure Management report, we got a preview of how IT teams would spend that time.


It’s fitting that a city known for its cloudy days is home to two of the largest players in cloud computing. The Seattle data center market is growing, bolstered by local industry, Northern California-based expansions and cloud infrastructure investments from Microsoft and Amazon.

Home to coffee, technology and online retail conglomerates, Seattle’s other major industries include aerospace and health care. The burgeoning cloud market is fueled by relatively low power costs and connectivity options available to the cloud computing businesses in the city and surrounding suburbs. The area also experiences months of cool and rainy weather, allowing for free cooling for the data centers located here, a benefit for data center operators and tenants alike.

The demand in the Seattle data center market is high, with net absorption of 25.3 MW. In 2019, the average size of deployments was on the rise, with many users requiring multiple megawatts plus room for expansion.

Considering Seattle for a colocation or network solution? There are several reasons why we’re confident you’ll call INAP your future partner in this competitive market.

INAP’s Flagship Seattle Data Centers

INAP, which was founded in Seattle in 1996, maintains two flagship facilities and a POP in the market. With our suite of Connectivity Solutions – Cloud Connect, Metro Connect and Network Connect, you can reliably link your infrastructure with endpoints in public clouds throughout Seattle and around the globe.

Connect to Chicago and Silicon Valley via INAP’s reliable, high-performing backbone, and benefit from Metro Connect fiber that enables highly available connectivity within the region via our Ethernet rings. Paired with Performance IP®, INAP’s patented route optimization software, customers in our Seattle flagships get the application availability and performance their customers demand.

Here are the average latency benchmarks on the backbone connection from Seattle:

- Chicago: 49.2 ms

- Silicon Valley: 19.0 ms

Our facilities in Seattle offer over 101,500 square feet of space, with 57,000 square feet of raised flooring. NOC and onsite engineers with remote hands are available 24/7/365.

Download the overview of INAP’s presence in Seattle here [PDF]

140 4th Avenue

This Tier 3, carrier neutral, SOC 2 Type II facility in downtown Seattle offers interconnectivity via high-performing direct backbone connections to Chicago and Silicon Valley on INAP’s high-capacity private network.

- Space: 40,000 square feet

- Power: 3 MW

- Network: Performance IP® Mix—AT&T, Cogent Communications, NTT, Zayo, Comcast, Verizon, INAP

Download the spec sheet on this downtown Seattle flagship data center here [PDF]

3355 120th Place

Our Tier 3, carrier neutral, SOC 2 Type II facility located just outside of downtown Seattle is concurrently maintainable, energy efficient and supports high power-density environments of 20+ kW per rack.

- Space: 65,000 square feet

- Power: 6 MW

- Network: Performance IP® Mix—AT&T, Cogent, NTT, Zayo, Comcast, Verizon, INAP

Download the spec sheet on this Seattle flagship data center here [PDF]

Spend Portability Appeals for Future-Proofing Infrastructure

Organizations need the ability to be agile as their needs change. With INAP Interchange, INAP Seattle data center customers need not worry about getting locked into a long-term infrastructure solution that might not be the right fit years down the road.

The program allows customers to exchange infrastructure environments a year (or later) into their contract so that they can focus on current-state IT needs while knowing they will be able to adapt for future-state realities. For example, should you choose a solution in a data center in Silicon Valley, but find over time that you need to be closer to a user base in Phoenix, you can easily make the shift.

Colocation, Bare Metal and Private Cloud solutions are eligible for Interchange.

Learn more about INAP Interchange by downloading the FAQ.


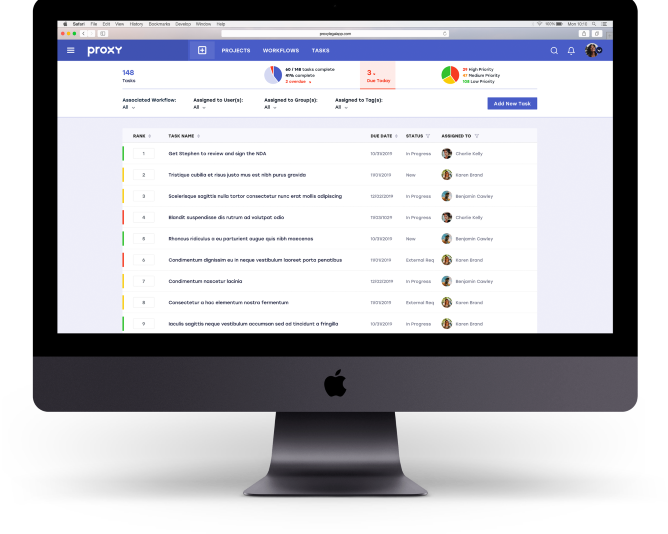

INAP’s New Strategic Alliance Showcases Benefits of Private Cloud for Legal Tech

We’re pleased to share that NMBL Technologies LLC (pronounced “nimble”) today announced it will offer its legal workflow management solution, Proxy, in a secure private cloud environment managed by INAP.

The partnership strengthens INAP’s foothold in the legal services industry, and bolsters Proxy’s position as a secure legal technology solution that businesses can trust with their most sensitive data.

“While cloud adoption in the legal services industry has improved recently, many corporate legal departments remain wary of moving to a public cloud provider,” said Daniel Farris, a NMBL founder and the company’s so-called Chief Man in Suit. “Leveraging INAP’s secure private cloud environment further bolsters Proxy’s position as a leader in secure legal technology.”

INAP’s hosted private cloud solution is offered as a logically isolated or fully dedicated private environment ideal for mission-critical applications with strict compliance standards and performance requirements.

“INAP is already a trusted provider of cloud services in highly regulated industries, including healthcare, financial services and ecommerce, where stringent standards like HIPAA and PCI dictate how infrastructure and applications are secured and managed,” said T.J. Waldorf, INAP’s Chief Marketing Officer. “When we looked at the legal services market, we could see that it was underserved, and very much in need of providers who understand the unique issues lawyers face when adopting and implementing new technology. This alliance is a natural fit for us, bringing together our platform with a workflow solution tailor-made for corporate legal departments.”

“Inefficiencies brought about by antiquated processes, lack of adequate controls, poor workflow management and collaboration tools, and the generally ineffective use of technology plague the legal market,” said Farris. “Corporate legal departments feel the effects more acutely than most; they’re often trying to manage their work with solutions that weren’t built for their needs.”

“In-house lawyers are under greater pressure than most other business managers to protect data and documents,” agreed Rich Diegnan, INAP’s Executive Vice President and General Counsel. “In fact, lawyers in many states have an ethical obligation to understand and ensure the security of the legal technology they use. Implementing already-secure platforms like Proxy in INAP’s secure private cloud environment allows in-house lawyers like me to migrate to the cloud with confidence.”

NMBL and INAP have a common perspective on their work, viewing the management of legal work performed within companies by in-house counsel as the most significant opportunity in legal tech.

“NMBL is embracing the legal ops movement, viewing in-house legal departments as business units, and developing enterprise solutions like Proxy for the corporate legal ecosystem,” said Christopher Hines, NMBL’s so-called Uber Geek. “Partnering with INAP is a no-brainer for us—their secure IaaS solution is yet another differentiator that creates competitive advantage for Proxy.”

“Seventy-five percent of all U.S. legal work is now performed by in-house counsel, but the majority of legal technology products and solutions are still targeted at firm lawyers,” said Nicole Poulos, NMBL’s Marketing Maven. “Combining legal ops, workflow management, and a secure cloud platform powered by INAP will provide corporate legal departments with greater control of their legal function, improve efficiency, and provide actionable data and intelligence.”

The two companies also share a geographic connection. INAP’s cloud services expansion has been heavily influenced by the Chicago-based personnel brought on with the company’s acquisition of managed hosting provider SingleHop in 2018. Also based in Chicago, NMBL was recently named a Finalist in the American Bar Association’s Startup Pitch Competition at TECHSHOW 2020, taking place in Chicago in February.

“The Chicago tech scene has generally been more focused on enterprise and B2B solutions,” said Farris. “INAP and NMBL are both dedicated to helping companies be more productive and efficient by enabling the adoption of strong technology suites.”

“Cloud adoption in the legal market still trails other sectors significantly,” said Jennifer Curry, INAP’s SVP of Global Cloud Services. “The most-cited reason is concern about privacy and data security. INAP’s secure private cloud solutions make a lot of sense for an industry focused on not only ensuring the privacy and security of information, but also on faster, better, more effective ways to manage legal tasks and documents.”

Both companies focus on providing flexible, lightweight solutions to customers in regulated industries.

“INAP is always looking to establish alliances with disruptive tech companies,” said Waldorf. “Our scalable, reliable and secure platform offers best-in-class architecture with all the convenience and power of any well-established cloud provider. NMBL wants to leverage INAP’s platform to continue to disrupt an industry ripe for technology-driven change.”


The Most Overhyped Tech Trends and Buzzwords of 2020

Tech trends impacting IT departments evolve at a break-neck pace. Whether they are expected or not, this reality forces IT pros to quickly distinguish which trends are relevant and which can be treated with skepticism.

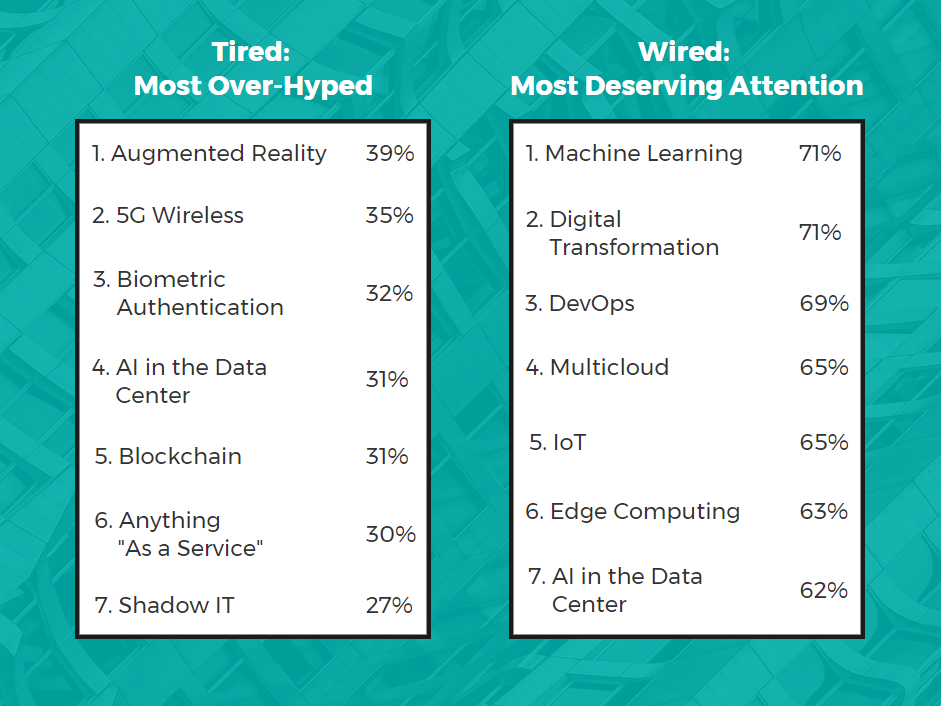

To learn what tech trends really deserve attention in the coming year, we presented 500 senior IT professionals and infrastructure managers a list of general tech and IT buzzwords and asked whether these trends will be overhyped or noteworthy in 2020.

Take a look at the results:

Trends IT Pros Deemed as Over-Hyped

None of the buzzwords/trends broke 50 percent in the over-hyped column. While there wasn’t a broad consensus, augmented reality (AR), 5G wireless, biometric authentication, blockchain and AI in the data center claimed the top five spots in this category.

Augmented Reality

AR ranked highest on the list of over-hyped trends at 39 percent, but the majority agree that it deserves attention (51 percent). Why the disparity? It may depend on whether or not AR has practical applications for the IT pro’s industry or business.

There are plenty of examples of AR being incorporated across a variety of use cases. While it has more prominently (and visibly) captured the imagination of the public, AR has also worked its way into the data center. As this technology continues to develop, a greater majority of IT pros might come to view AR as something worth giving attention.

5G Wireless

This next frontier in wireless may be a popular topic of discussion, but 35 percent of IT pros think it will be overhyped in 2020. Mary Jane Horne, INAP’s SVP of Global Network Services, weighed in on the 5G buzz at CAPRE’s seventh annual Mid-Atlantic Data Center conference in September 2019.

“Just because I have a region with 5G doesn’t mean that that particular provider is going to get it to the destination the fastest,” said Horne. “I need to have a variety of providers I can pick from. 5G is only going to be as fast as the background network.”

CNN Business notes that most consumers won’t be using 5G technology until 2025. At the enterprise level, conversation on 5G will be more relevant when upstream network infrastructure is better equipped to handle it.

Biometric Authentication

While it may come as a surprise that 1 in 3 IT pros view this proven, cutting-edge branch of security technology as over-hyped, it may not be practical for every business or IT team.

Biometric authentication is another layer of protection on top of a strong security system, and it can frequently apply to specific locations, such as data centers or other high-security facilities. However, for day-to-day user access, it may not hold as much of a practical application as implementing two-factor authentication.

What Tech Trends Will Earn IT’s Attention in 2020?

Participants showed greater consensus on what is really deserving of attention in 2020, with 71 percent of pros agreeing on the top buzzwords—machine learning and digital transformation. DevOps and multicloud rounded out the top four, with more than 65 percent of IT pros saying these trends deserve attention.

Multicloud

Multicloud strategies continue to gain traction as we launch into 2020. The model gives companies the flexibility to pick the right environments that allow their workloads to run optimally.

“The adoption of multicloud by enterprises spurs the innovation of tools to monitor and manage across platforms and increases the value of the role MSPs play in partnering to design and manage these complex scenarios,” says Jennifer Curry, SVP of Global Cloud Services.

She continues, “The traditional division of dev and test in public cloud and production on prem or in a private cloud will go by the wayside as multicloud makes it easier to optimize your environment without sacrificing performance, security or visibility.”

We can definitely expect to hear much more on multicloud services over the coming years.

Digital Transformation and DevOps

Seventy-one percent of IT pros deem digital transformation to be worth attention. While some may think the term has become a bit “nebulous from overuse,” the concept itself is popular because companies across industries are adopting new technology that changes the way business itself is done.

DevOps is tied into digital transformation, with software developers and IT teams working in closer collaboration to more rapidly build, test and release new software. With these silos eliminated, processes can be automated, giving IT pros the ability to solve critical issues faster.

Senior leaders are significantly more likely to think DevOps deserves attention than non-senior IT infrastructure managers, with a 21 versus 11 percent split, respectively. As leaders of their respective areas, it makes sense that fostering a DevOps culture or philosophy is appealing, especially if it means that work can be done with greater speed, agility and results.

Machine Learning

Considering that machine learning makes it possible for a computer to efficiently process large amounts of information and make adjustments accordingly, it’s no wonder that 71 percent of participants said this technology deserves attention. Paired with AI, machine learning can be even more effective. At present, the growing amount and variety of data makes this technology necessary for delivering faster and more accurate results.

The cost effectiveness of machine learning will likely lead to shifting functions in tech roles. As accurate and efficient as machine learning technology is, time and resources are still needed to train it properly. IT pros will need to stay on top of the latest education and skill building as their career paths evolve.


In a world where advanced cyberattacks are increasing in frequency and causing progressively higher costs for affected organizations, security is of the utmost importance no matter what infrastructure strategy your organization chooses. Despite longstanding myths, cloud environments are not inherently less secure than on-premise. With so many people migrating workloads to the cloud, however, it’s important to be aware of the threat landscape.

Ten million cybersecurity attacks are reported to the Pentagon every day. In 2018, the number of records stolen or leaked from public cloud storage due to poor configuration totaled 70 million. And it’s estimated that the global cost of cybercrime by the end of 2019 will total $2 trillion.

In response to the new cybersecurity reality, it is estimated that the annual spending on cloud security tools by 2023 will total $12.6 billion.

Below, we’ll cover six ways to secure your cloud. This list is by no means exhaustive, but it will give you an idea of the security factors worth weighing.

Mitigating Cybersecurity Threats with Cloud Security Systems and Tools

1. Intrusion Detection and 2. Intrusion Prevention Systems

Intrusion detection systems (IDS) and intrusion prevention systems (IPS) are important tools for ensuring your cloud environment is secure. These systems actively monitor the cloud network and systems for malicious activity and policy violations. Detected events may be reported directly to your administration team or collected and sent via a secure channel to an information management solution.

IDSs use a database of known threats to monitor all activity by users and devices in your cloud environment and immediately spot threats such as SQL injection techniques, known malware worms with defined signatures and invalid security certificates.
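Signature-based detection boils down to comparing observed activity against a catalog of known-bad patterns. The sketch below is a deliberately simplified illustration: the three signatures are hypothetical regular expressions applied to request lines, whereas production IDS engines use far richer rule languages, protocol decoding and tuned rule sets.

```python
import re
from dataclasses import dataclass

@dataclass(frozen=True)
class Signature:
    name: str
    pattern: re.Pattern

# Hypothetical, simplified signatures for illustration only.
SIGNATURES = [
    Signature("sql-injection-tautology", re.compile(r"'\s*or\s+'?1'?\s*=\s*'?1", re.IGNORECASE)),
    Signature("sql-injection-union", re.compile(r"\bunion\s+(all\s+)?select\b", re.IGNORECASE)),
    Signature("path-traversal", re.compile(r"\.\./")),
]

def match_signatures(request_line: str) -> list:
    """Return the names of all known-threat signatures that match a request line."""
    return [sig.name for sig in SIGNATURES if sig.pattern.search(request_line)]

def scan(log_lines):
    """Yield (line_number, request, matched_signatures) for each line that raises an alert."""
    for number, line in enumerate(log_lines, start=1):
        hits = match_signatures(line)
        if hits:
            yield number, line, hits

if __name__ == "__main__":
    logs = [
        "GET /products?id=42",
        "GET /login?user=admin' OR '1'='1",
        "GET /search?q=1 UNION SELECT password FROM users",
    ]
    for alert in scan(logs):
        print(alert)
```

The weakness the paragraph implies is visible here too: a signature only catches what is already in the catalog, which is why behavioral tools such as UEBA complement it.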

IPS devices work at different layers and are often features of next-generation firewalls. These solutions are known for real-time deep packet inspection that alerts to potential threat behaviors. Sometimes these behaviors may be false alarms but are still important for learning what is and what is not a threat for your cloud environment.

3. Isolating Your Cloud Environment for Various Users

As you consider migrating to the cloud, understand how your provider will isolate your environment. In a multi-tenant cloud, where many organizations share the same technology resources (e.g., multi-tenant storage), environments are segmented using VLANs and firewalls configured for least access.

Any-any rules are the curse of all networks and are the first thing to look for when investigating firewall rules. Much like leaving your front door wide open all day and night, this rule is an open policy of allowing traffic from any source to any destination over any port. A good rule of thumb is to block all ports and networks and then work up from there, testing each application and environment thoroughly. This may seem time-consuming, but working through a checklist of ports and connection scenarios during setup is more efficient than opening ports and allowing networks later.
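The "block everything, then work up" approach and the any-any audit can be sketched as a tiny first-match rule evaluator. This is an illustrative model, not any firewall product’s syntax: the `Rule` fields, the subnets and the PostgreSQL port in the example policy are assumptions chosen for the demo.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Union

ANY = "any"

@dataclass(frozen=True)
class Rule:
    source: str             # CIDR block or "any"
    destination: str        # CIDR block or "any"
    port: Union[int, str]   # port number or "any"
    action: str = "allow"

def is_any_any(rule: Rule) -> bool:
    """Flag the 'front door wide open' rule: allow any source to any destination on any port."""
    return (rule.action == "allow" and rule.source == ANY
            and rule.destination == ANY and rule.port == ANY)

def _addr_matches(cidr: str, addr: str) -> bool:
    return cidr == ANY or ip_address(addr) in ip_network(cidr)

def evaluate(rules: list, src: str, dst: str, port: int) -> str:
    """Return the action of the first matching rule; anything unmatched is denied."""
    for rule in rules:
        if (_addr_matches(rule.source, src)
                and _addr_matches(rule.destination, dst)
                and rule.port in (ANY, port)):
            return rule.action
    return "deny"  # default deny: block everything, then open only what has been tested

# Hypothetical policy: only the app subnet may reach the database host, and only on 5432.
rules = [Rule("10.0.1.0/24", "10.0.2.10/32", 5432)]
print(evaluate(rules, "10.0.1.15", "10.0.2.10", 5432))  # allow
print(evaluate(rules, "10.0.1.15", "10.0.2.10", 22))    # deny
print([r for r in rules if is_any_any(r)])              # []
```

Because unmatched traffic falls through to `deny`, each new application requires an explicit, reviewable rule, and the any-any check gives a quick first pass when auditing an existing rule set.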

It’s also important to remember that while the provider owns the security of the cloud, customers own the security of their environments in the cloud. Assess tools and partners that allow you to take better control. For instance, powerful tools such as VMware’s NSX support unified security policies and, through their automation capabilities, provide one place to manage firewall rules.

4. User Entity Behavior Analytics

Modern threat analysis employs User Entity Behavior Analytics (UEBA) and is invaluable to your organization in mitigating compromises of your cloud software. Through a machine learning model, UEBA analyzes data from reports and logs, different types of threat data and more to discern whether certain activities are a cyberattack.

UEBA detects anomalies in the behavior patterns of your organization’s members, consultants and vendors. For example, a finance department manager’s user account would be flagged if it were downloading files from different parts of the world at different times of day, or editing files from multiple time zones at the same time. In some instances, this might be legitimate behavior for that user, but the IT director should still exercise due diligence when UEBA puts out this type of alert. A quick call to confirm the behavior can prevent data loss, or the loss of millions of dollars in revenue, if the cloud environment has indeed been compromised.
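The finance-manager example corresponds to what is often called an "impossible travel" check. The sketch below is a rule-based stand-in, not a machine learning model: real UEBA products learn per-user baselines from many signals. The `Event` shape, the 900 km/h speed ceiling (roughly airliner cruising speed) and the 50 km noise floor for geolocation jitter are all assumptions.

```python
import math
from dataclasses import dataclass
from datetime import datetime

@dataclass(frozen=True)
class Event:
    user: str
    timestamp: datetime
    lat: float
    lon: float

EARTH_RADIUS_KM = 6371.0

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def impossible_travel(events, max_speed_kmh=900.0, min_km=50.0):
    """Yield (previous, current, km) for consecutive events per user whose implied speed is implausible."""
    last_seen = {}
    for event in sorted(events, key=lambda e: e.timestamp):
        prev = last_seen.get(event.user)
        if prev is not None:
            km = haversine_km(prev.lat, prev.lon, event.lat, event.lon)
            hours = (event.timestamp - prev.timestamp).total_seconds() / 3600
            # Simultaneous activity from distant places is treated as infinitely fast.
            speed = km / hours if hours > 0 else math.inf
            if km >= min_km and speed > max_speed_kmh:
                yield prev, event, km
        last_seen[event.user] = event

if __name__ == "__main__":
    events = [
        Event("finance-mgr", datetime(2020, 2, 3, 9, 0), 41.88, -87.63),   # Chicago
        Event("finance-mgr", datetime(2020, 2, 3, 10, 0), 1.35, 103.82),   # Singapore, one hour later
    ]
    for prev, curr, km in impossible_travel(events):
        print(f"ALERT {curr.user}: {km:,.0f} km between {prev.timestamp:%H:%M} and {curr.timestamp:%H:%M}")
```

An alert like this is a prompt for the "quick call" described above rather than proof of compromise: VPNs and shared accounts can produce the same pattern legitimately.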

5. Role-Based Access Control

All access should be given with caution and on an as-needed basis. Role-based access control (RBAC) allows employees to access only the information they need to do their jobs, restricting network access accordingly. RBAC tools allow you to designate what role the user plays (administrator, specialist, accountant, etc.) and add them to various groups. Permissions will change depending on user role and group membership. This is particularly useful for DevOps organizations, where some developers may need more access than others, or access to specific cloud environments but not others.
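The model described above (roles carry permissions, groups confer roles, and access is further scoped to specific cloud environments) can be sketched in a few dictionaries. Every role, group, permission string and environment name below is hypothetical; real RBAC tools have their own policy formats.

```python
from dataclasses import dataclass, field

# Hypothetical roles, groups and permission strings, for illustration only.
ROLE_PERMISSIONS = {
    "administrator": {"vm:create", "vm:delete", "firewall:edit", "billing:view"},
    "developer": {"vm:create", "logs:view"},
    "accountant": {"billing:view"},
}
GROUP_ROLES = {
    "platform-team": {"administrator"},
    "app-team": {"developer"},
}

@dataclass
class User:
    name: str
    roles: set = field(default_factory=set)
    groups: set = field(default_factory=set)
    environments: set = field(default_factory=set)  # cloud environments the user may touch

def effective_roles(user: User) -> set:
    """Direct roles plus any roles inherited through group membership."""
    inherited = set().union(*(GROUP_ROLES.get(g, set()) for g in user.groups))
    return set(user.roles) | inherited

def is_allowed(user: User, permission: str, environment: str) -> bool:
    """Allow only if the user is scoped to the environment AND some role grants the permission."""
    if environment not in user.environments:
        return False
    return any(permission in ROLE_PERMISSIONS.get(r, set()) for r in effective_roles(user))

dana = User("dana", groups={"app-team"}, environments={"dev", "staging"})
print(is_allowed(dana, "vm:create", "dev"))         # True
print(is_allowed(dana, "vm:create", "production"))  # False
print(is_allowed(dana, "billing:view", "dev"))      # False
```

Keeping permissions on roles rather than on individual users is what makes the documentation step in the next paragraph practical: the written policy is essentially the two mappings at the top.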

When shifting to RBAC, document the changes and specific user roles so that they can be put into a written policy. As you define the user roles, have conversations with employees to understand what they do. And be sure to communicate why implementing RBAC is good for the company: it helps you secure your company’s data and applications by managing access not only for employees, but for third-party vendors as well.

6. Assess Third Party Risks

As you transition to a cloud environment, vendor access should also be considered. Each vendor should have unique access rights and access control lists (ACLs) in place that are native to the environments they connect from. Always remember that third-party risk equates to enterprise risk. Infamous data breach incidents (remember Target in late 2013?) resulting from hackers’ infiltration of an enterprise via a third-party vendor should be enough of a warning to call into question how much you know about your vendors and the security controls they have in place. Third-party risk management is considered a top priority for cybersecurity programs at a number of enterprises. Customers will not view your vendor as a separate company from your own in the event that something goes sideways and the information goes public. Protect your company’s reputation by protecting it from third-party risks.
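One way to read "unique access rights and ACLs native to the environments they connect from" is a per-vendor entry that pins both the source networks a vendor may connect from and the resources it may reach. That interpretation is an assumption, as are the vendor names, resource names and the documentation-range IP blocks below.

```python
from ipaddress import ip_address, ip_network

# Hypothetical per-vendor ACLs: each vendor gets its own entry, limited to the
# networks it connects from and the specific resources it needs.
VENDOR_ACLS = {
    "facilities-vendor": {
        "source_networks": [ip_network("203.0.113.0/28")],
        "resources": {"building-sensors-api"},
    },
    "payroll-provider": {
        "source_networks": [ip_network("198.51.100.32/27")],
        "resources": {"hr-export-bucket"},
    },
}

def vendor_may_access(vendor: str, source_ip: str, resource: str) -> bool:
    """Allow only known vendors, from their registered networks, to their listed resources."""
    acl = VENDOR_ACLS.get(vendor)
    if acl is None:
        return False  # unknown vendors get nothing by default
    addr = ip_address(source_ip)
    from_known_network = any(addr in net for net in acl["source_networks"])
    return from_known_network and resource in acl["resources"]

print(vendor_may_access("facilities-vendor", "203.0.113.5", "building-sensors-api"))  # True
print(vendor_may_access("facilities-vendor", "203.0.113.5", "hr-export-bucket"))      # False
```

Scoping each vendor this narrowly limits how far an attacker can move if a vendor’s credentials are stolen, which is the scenario the Target example warns about.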

Parting Thoughts

The above tools are just several resources for ensuring your cloud environment is secure in multi-tenant or private cloud situations. As you consider the options for your cloud implementation, working with a trusted partner is a great way to meet your unique needs for your specific cloud environment.


3 Tips for Making Sure Your Brand’s Website Is Ready on Super Bowl Sunday

Editor’s note: This article was originally published Jan. 31, 2020 on Adweek.com.

There’s a hidden cost to even the most successful, buzz-generating Super Bowl ads: All that hard-earned (and expensively acquired) attention can easily bring a website to a standstill or break it altogether.

We’re 12-plus years into the “second screen” era, and websites and applications during the Big Game are still frequently overwhelmed by the influx of visitors eagerly answering calls to action. Last year it was the CBS service streaming the game itself that failed to stay fully operational. In 2017, it was a lumber company taking a polarizing post-election stand. Advertisers in 2016’s game collectively witnessed website load time increases of 38%, with one retail tech company’s page crawling at 10-plus seconds.

We pay a lot of attention to the ballooning per-second costs of these prized spots. We need to make more of a fuss about the opportunity costs of sites that buckle under the pressure of their brand's own success.

Note that even a tenth-of-a-second slowdown on a website can take a heavy toll on conversion rates. Longer delays will send viewers back to their Twitter feeds. For first-time advertisers, this is an audience they may never see again. For big consumer brands, the expected hype can pivot quickly to reputation damage control. For ecommerce brands, downtime is a disaster that could mean millions in lost revenue.

This year's game may or may not yield another high-profile example, but there's a lesson here for marketers at brands of all sizes: align your planned or unplanned viral triumphs with a tech infrastructure capable of rising to the occasion.

To do so, let's briefly address two reasons why gaffes like this happen. The first, lightly technical explanation is that crashes and overloads occur when the number of requests and connections made by visitors exceeds the resources allocated to the website's servers. The second, much-less-technical explanation is that executives didn't sit down with their IT team early enough (or at all) to prevent explanation one.
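The first explanation can be put into rough numbers with Little's Law, which says the average number of requests in flight equals the arrival rate multiplied by the average time each request spends in the system. The sketch below is a back-of-the-envelope capacity estimate, not a sizing method from this article; the traffic figures, per-server concurrency, and 30% headroom are illustrative assumptions.

```python
import math


def requests_in_flight(requests_per_second: float, avg_response_seconds: float) -> float:
    """Little's Law: average requests in the system = arrival rate x time in system."""
    return requests_per_second * avg_response_seconds


def servers_needed(requests_per_second: float, avg_response_seconds: float,
                   concurrency_per_server: int, headroom: float = 0.3) -> int:
    """Servers required to hold the in-flight load plus an assumed safety margin."""
    in_flight = requests_in_flight(requests_per_second, avg_response_seconds)
    return math.ceil(in_flight * (1 + headroom) / concurrency_per_server)


# Hypothetical spike: 20,000 requests/s, 250 ms average response time.
print(requests_in_flight(20_000, 0.25))   # 5000.0
print(servers_needed(20_000, 0.25, 400))  # 17
```

Note the feedback loop this exposes: if an overloaded site's response time doubles, the number of requests in flight doubles too, which is one reason a slowdown can tip into an outage.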

So, in that spirit of friendly interdepartmental alignment, here are a few pointers:

Focus on the Site’s Purpose

What’s the ideal user experience for those fleeting moments you hold a visitor’s attention? Answering this simple question will help your IT partners think holistically, identify potential bottlenecks in the system and allocate the right amount of resources to your web infrastructure.

For instance, if you're driving viewers to a video, your outbound bandwidth will need to pack a punch. If you're an ecommerce site processing a high volume of concurrent transactions, you'll need a lot of computing power and memory to handle dynamic requests. Image-heavy web assets may need compression tools. In any case, your IT team will need to be ready with scalable contingencies. That's why we see more enterprises adopting sophisticated multi-cloud and networking strategies that keep key assets online through the peaks and valleys.
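A quick worked example shows why video pushes outbound bandwidth so hard. The viewer count, bitrate, and spike length below are hypothetical; the point is that egress grows linearly with concurrent viewers multiplied by bitrate.

```python
def peak_egress_gbps(concurrent_viewers: int, bitrate_mbps: float) -> float:
    """Outbound bandwidth if every concurrent viewer streams at bitrate_mbps."""
    return concurrent_viewers * bitrate_mbps / 1_000  # Mbps -> Gbps (decimal units)


def data_transferred_tb(concurrent_viewers: int, bitrate_mbps: float, minutes: float) -> float:
    """Total data served over a sustained spike, in terabytes (decimal units)."""
    megabits = concurrent_viewers * bitrate_mbps * minutes * 60
    return megabits / 8 / 1_000_000  # megabits -> megabytes -> terabytes


# Hypothetical game-day spike: 50,000 viewers at 5 Mbps for 10 minutes.
print(peak_egress_gbps(50_000, 5))         # 250.0
print(data_transferred_tb(50_000, 5, 10))  # 18.75
```

Figures of this order are the kind of load that scalable contingencies, such as spreading delivery across providers, are meant to absorb.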

Have the Cybersecurity Talk

Mass publicity could very well make your website a target for bad actors; that's the simple reality of an increasingly digital economy. Have information security experts probe your site for vulnerabilities before major campaigns. Similarly, ask your IT team whether your network can fend off denial-of-service attacks, in which malicious actors send a deluge of fake traffic to your servers for the sole purpose of knocking you offline. While these attacks are increasingly powerful and prevalent, gains in automation and machine learning mean they can be mitigated with the right tools.
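One simple, widely used building block behind automated traffic mitigation is per-client rate limiting. The sketch below keeps a token bucket per source address: each client may burst up to `capacity` requests, after which its allowance refills at `rate` requests per second. The class names and parameters are illustrative, and a limiter like this only blunts floods from individual sources; large distributed attacks need mitigation upstream, at the network or provider level.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class PerClientLimiter:
    """One bucket per client address, so a single flooding source is throttled.

    A production limiter would also evict idle buckets to bound memory use.
    """

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.buckets: dict[str, TokenBucket] = {}

    def allow(self, client_ip: str) -> bool:
        if client_ip not in self.buckets:
            self.buckets[client_ip] = TokenBucket(self.rate, self.capacity, self.clock)
        return self.buckets[client_ip].allow()
```

With `rate=1.0` and `capacity=3`, a client sending five requests at once gets three through and two refused, while every other client keeps its own full bucket. Passing in the clock makes the behavior easy to test without waiting in real time.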

Don’t Forget the Dress Rehearsal

If you’re planning a major campaign or your business is prone to seasonal traffic spikes, request that your tech partners run load tests. You’ll see firsthand what happens to your site performance when, for instance, your social team’s meme game finally strikes gold.
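To show what a load test measures, here is a minimal Python sketch that fires concurrent requests at a URL and reports the error count plus median and 95th-percentile latency. It starts a throwaway local server (`DemoServer` and `DemoHandler` are hypothetical stand-ins for your site) so it runs on its own; a real test would target a staging environment with dedicated load-testing tools and traffic shapes modeled on the expected spike.

```python
import statistics
import threading
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class DemoHandler(BaseHTTPRequestHandler):
    """Stand-in for the real site: serves a tiny HTML page."""

    def do_GET(self):
        body = b"<html><body>Game day!</body></html>"
        self.send_response(200)
        self.send_header("Content-Type", "text/html")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging


class DemoServer(ThreadingHTTPServer):
    request_queue_size = 128  # larger listen backlog so bursts aren't refused


def timed_get(url: str) -> tuple[bool, float]:
    """Fetch the URL once; return (succeeded, elapsed seconds)."""
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(url, timeout=5) as resp:
            resp.read()
            ok = resp.status == 200
    except OSError:  # covers URLError, HTTPError, and timeouts
        ok = False
    return ok, time.perf_counter() - start


def load_test(url: str, total_requests: int, concurrency: int) -> dict:
    """Send total_requests GETs using `concurrency` worker threads."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_get, [url] * total_requests))
    latencies = sorted(elapsed for ok, elapsed in results if ok)
    errors = sum(1 for ok, _ in results if not ok)
    return {
        "requests": total_requests,
        "errors": errors,
        "p50_ms": statistics.median(latencies) * 1000 if latencies else None,
        # Simple index-based percentile; adequate for a quick report.
        "p95_ms": latencies[int(0.95 * (len(latencies) - 1))] * 1000 if latencies else None,
    }


if __name__ == "__main__":
    server = DemoServer(("127.0.0.1", 0), DemoHandler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    url = f"http://127.0.0.1:{server.server_address[1]}/"
    print(load_test(url, total_requests=200, concurrency=20))
    server.shutdown()
    server.server_close()
```

Watching how `errors` and `p95_ms` change as you raise `concurrency` is the dress rehearsal in miniature: the point where tail latency climbs or errors appear is roughly where real visitors would start to feel it.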

Ultimately, website performance should be a 24/7 consideration. Ask your IT team about the monitoring tools they have in place and, more importantly, the processes and people ready to take any necessary action.

Here’s hoping Sunday’s advertisers don’t squander their 15 minutes of fame with a 15-second page load. But if history does repeat itself, use it as fuel to ensure it doesn’t happen to you.