Month: June 2013

Big data is all the rage these days, and the hype meter is revving at an all-time high. But getting caught up in the hype can be risky for your big data applications if you don’t plan for the highest possible disk I/O. Without the right infrastructure in place, you may be disappointed by your application’s performance.

Many people assume that cloud means virtualization, but we don’t think that’s necessarily true. At Internap, we believe cloud can also be bare-metal. This means you do not run a hypervisor, and it’s not virtualized – it’s just a bare-metal server with an operating system, but delivered on a cloud-like service model.

This means you can spin up a server, or hundreds of them, in a matter of minutes and throw them away once you’re done with your application or your workload is complete. We’ve basically taken the service delivery model of the cloud and applied it to non-virtualized servers.

Why is this interesting for big data? The answer is disk I/O. For Hadoop or any other big data application, disk I/O is the number one enemy. If you can solve that problem and give your application good disk I/O, your big data application will run circles around those deployed on a virtualized cloud. Bare-metal cloud (no hypervisor, just the operating system) is a great match for big data, and Internap is one of the few providers that can offer a non-virtualized cloud environment.
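If you want a quick way to compare disk I/O on a virtualized instance and on a bare-metal server, you could run the same small measurement on each. The sketch below is a minimal, assumption-laden example: it times a single-threaded sequential write (with `fsync` so the data actually reaches the disk) and reports MB/s. The function name and default sizes are illustrative, and this is only a first signal, not a substitute for a full benchmark tool such as fio that also covers random I/O, reads and queue depth.

```python
import os
import tempfile
import time


def sequential_write_mb_per_s(size_mb: int = 64, block_kb: int = 1024) -> float:
    """Write size_mb of random data in block_kb chunks, fsync, return MB/s.

    Illustrative sketch only: one thread, sequential writes, one run.
    """
    block = os.urandom(block_kb * 1024)
    blocks = (size_mb * 1024) // block_kb
    fd, path = tempfile.mkstemp()
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            for _ in range(blocks):
                f.write(block)
            f.flush()
            os.fsync(f.fileno())  # force data to disk, not just the page cache
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)
    written_mb = blocks * block_kb / 1024
    return written_mb / elapsed


if __name__ == "__main__":
    print(f"{sequential_write_mb_per_s():.1f} MB/s sequential write")
```

Running the same script on both environments, with the same file size, gives a rough like-for-like comparison of the disk path each one offers.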

Explore HorizonIQ's

Managed Private Cloud

LEARN MORE

Stay Connected

While many IT decision makers are familiar with how Software as a Service (SaaS) can benefit their organization, PaaS (Platform as a Service) has become just as popular. Add IaaS (Infrastructure as a Service) to the mix, and questions often arise: how do you know which one best fits your needs, and how do these cloud services work together? When building an application, you can choose whichever combination of services gives you the right balance of flexibility and resource control to meet your requirements.

IaaS

Think of IaaS, PaaS and SaaS as “stacks” that can either reduce the level of effort required to build your application, or provide more flexibility.

For example, IaaS provides raw infrastructure, such as compute and storage resources, offering the most flexibility while requiring the most hands-on management. Developers interact with the operating systems directly, build or use their own frameworks and consume infrastructure resources directly.

The image below shows the relationship of your application to the services provided by the IaaS provider.

PaaS

PaaS, on the other hand, will provide a development platform (as a service) to create new applications. Think of it as a cloud-based middleware: it provides services beyond the raw operating system and abstracts the underlying resources from you. The developer doesn’t have to manage compute resources; instead she gets to build on frameworks specifically provided for her application. For small dev teams without infrastructure management capabilities, this is a great way to reduce the “time-to-usefulness” of their project, but it restricts the flexibility since they must work within the frameworks provided.

PaaS services typically use an underlying IaaS service; e.g., Acquia (Drupal platform as a service) and Heroku (Ruby on Rails platform as a service) both use Amazon AWS as a foundation to their platforms. As an IaaS provider, Internap has similar customers that provide a Platform-as-a-Service for developers who want to develop code against a managed framework rather than manage the frameworks themselves.
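One way to make the "stack" idea concrete is to list the layers and mark where the provider’s responsibility ends and yours begins. The sketch below assumes the commonly cited split (with IaaS, the customer manages the operating system and everything above it; with PaaS, the customer manages only its data and application). Real offerings draw the line in different places, and on a bare-metal IaaS the virtualization layer is simply absent; the layer names and dictionary are illustrative.

```python
from __future__ import annotations

# Layers of a typical application stack, listed bottom to top.
STACK = [
    "networking", "storage", "servers", "virtualization",
    "operating system", "middleware", "runtime", "data", "application",
]

# Illustrative split (an assumption, not a formal definition): the first
# layer that the customer, rather than the provider, manages.
FIRST_CUSTOMER_LAYER = {
    "IaaS": "operating system",
    "PaaS": "data",
}


def responsibilities(model: str) -> dict[str, list[str]]:
    """Split the stack into provider-managed and customer-managed layers."""
    cut = STACK.index(FIRST_CUSTOMER_LAYER[model])
    return {"provider": STACK[:cut], "customer": STACK[cut:]}


print(responsibilities("IaaS")["customer"])
# ['operating system', 'middleware', 'runtime', 'data', 'application']
print(responsibilities("PaaS")["customer"])
# ['data', 'application']
```

Moving the cut point up the stack is exactly the trade described above: less for your team to manage, and less freedom in how the lower layers are built.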

The following example illustrates what a PaaS provider might offer to your application.

Tune in next time as we discuss how SaaS fits into the cloud infrastructure landscape, and how using a combination of these services can provide you with the desired level of control and flexibility for your applications.

An Open Letter to IT Departments:

I have a confession to make: in the past*, I’ve procured cloud services without your approval. I’ve used the cloud for file sharing, storage, project management and collaboration services and, at any given moment, I had at least four active subscriptions to cloud services that I used for business purposes. More often than not, you didn’t even know about any of them.

Was I purposely circumventing you as a peculiar act of defiance or intentionally compromising enterprise security? Of course not. I was just trying to get my job done as efficiently as possible. With tight deadlines, high project volume and lofty campaign goals, I needed the agility that the cloud provides. To be honest, I didn’t have the time to create a business case for these services and wait for your approval – especially when you’re so busy running day-to-day infrastructure operations and handling high-priority requests from other areas of the business.

I was unknowingly a part of the phenomenon known as Shadow IT – using hardware or software not supported by an organization’s IT department. And I’m not alone; Gartner predicts that 35% of enterprise IT expenditures will happen outside of the corporate IT budget by 2015 and the CMO will spend more on IT than the CIO by 2017.

At this point, you may be wondering what prompted me to confess these transgressions (on my current employer’s website, no less). On our recent webinar Hybridization: Shattering Silos Between Cloud and Colocation, my colleague Adam Weissmuller spoke about Shadow IT and how cloud’s accessibility and immediacy to the end user can often come at the expense of IT security and control. So, beyond letting you know that (a) the concept of Shadow IT is real, (b) it’s likely happening in your organization more than you realize and (c) I’m sorry for putting your control measures and security at risk, I wanted to share with you Adam’s suggestion for bringing Shadow IT back into the fold.

After identifying Marketing as one of the most notorious Shadow IT offenders, Adam illustrated how a cloud and colocation hybridized environment could enable quick, on-demand provisioning of additional server capacity for an upcoming marketing campaign. This type of infrastructure would enable IT to provide assets on demand, without capital outlay, and under its controls, while marketing can run their campaign on time without compromising enterprise security. You can listen to the full webinar recording here for more details, as well as other hybridization use cases: Hybridization: Shattering Silos Between Cloud and Colocation.

Thanks for reading and letting me shine the light on Shadow IT.

*Note that I have never and will never engage in such reckless behavior at Internap.

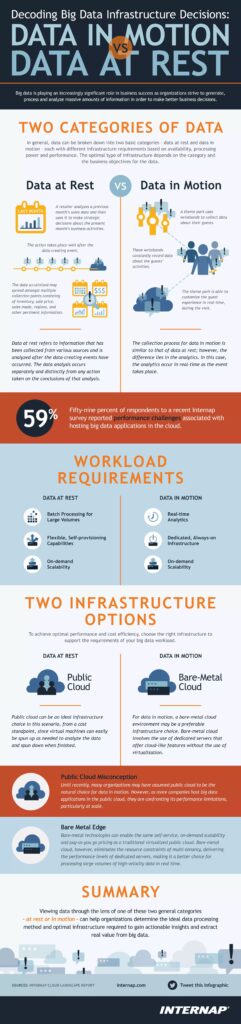

Data in Motion vs. Data at Rest

Gaining insights from big data is no small task. Having the right technology in place to collect, manage and analyze data for predictive purposes or real-time insight is critical. Different types of data may require different computing platforms to provide meaningful insights. Understanding the difference between data in motion vs. data at rest can help determine the type of technology and processing capabilities required to glean insights from the data.

What is data at rest?

This refers to data that has been collected from various sources and is then analyzed after the event occurs. The point where the data is analyzed and the point where action is taken on it occur at two separate times. For example, a retailer analyzes a previous month’s sales data and uses it to make strategic decisions about the present month’s business activities.

The action takes place after the data-creating event has occurred. This data is meaningful to the retailer, allowing it to create marketing campaigns and send customized coupons based on customer purchasing behavior and other variables. While the data provides value, the business impact depends on the customer coming back into the store to take advantage of the offers.
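The retail example maps naturally onto a batch job: the whole month’s records already exist, so the analysis runs over all of them at once and the action (a coupon) comes afterward. Here is a minimal sketch with made-up records; the record layout, the "top category" rule and the function name are hypothetical.

```python
from collections import defaultdict

# Hypothetical last-month sales records: (customer_id, category, amount)
sales = [
    ("c1", "shoes", 120.0),
    ("c2", "toys", 35.0),
    ("c1", "shoes", 80.0),
    ("c1", "toys", 15.0),
]


def top_category_per_customer(records):
    """Batch job over data at rest: aggregate the full period, then decide."""
    spend = defaultdict(lambda: defaultdict(float))
    for customer, category, amount in records:
        spend[customer][category] += amount
    # Target each customer's highest-spend category with a coupon.
    return {c: max(cats, key=cats.get) for c, cats in spend.items()}


coupons = top_category_per_customer(sales)
print(coupons)  # {'c1': 'shoes', 'c2': 'toys'}
```

Nothing here needs to run until the data has been collected, which is why this style of workload suits on-demand infrastructure that is switched off between runs.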

What is data in motion?

The collection process for data in motion is similar to that of data at rest; the difference lies in the analytics. In this case, the analytics occur in real time, as the event happens. An example would be a theme park that uses wristbands to collect data about its guests.

These wristbands would constantly record data about the guest’s activities, and the park could use this information to personalize the guest visit with special surprises or suggested activities based on their behavior. This allows the business to customize the guest experience during the visit. Organizations have a tremendous opportunity to improve business results in these scenarios.
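By contrast, the wristband example processes each event the moment it arrives and can act while the guest is still in the park. The sketch below is a hypothetical per-event handler: it keeps a running count per guest and emits a suggestion once a guest hits a threshold. The class, threshold and message format are illustrative assumptions, not a real theme-park system.

```python
from collections import defaultdict
from typing import Optional


class WristbandStream:
    """Hypothetical real-time handler: react to each event as it arrives."""

    def __init__(self, threshold: int = 3):
        self.threshold = threshold
        self.rides = defaultdict(int)  # running ride count per guest

    def on_event(self, guest_id: str, ride: str) -> Optional[str]:
        self.rides[guest_id] += 1
        # Decide during the visit, not after it: once a guest reaches the
        # threshold, push a suggestion tied to where they are right now.
        if self.rides[guest_id] == self.threshold:
            return f"{guest_id}: suggest an attraction near {ride}"
        return None


stream = WristbandStream(threshold=2)
events = [("g1", "coaster"), ("g2", "carousel"), ("g1", "log flume")]
offers = []
for guest, ride in events:
    msg = stream.on_event(guest, ride)
    if msg is not None:
        offers.append(msg)
print(offers)  # ['g1: suggest an attraction near log flume']
```

Because the decision is made inside the event handler, any delay in processing an event directly delays the action, which is why latency matters so much more here than in the batch case.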

Infrastructure for data processing

You might be wondering what type of IT Infrastructure would be needed to support data processing for both of these types. The answer depends on which method you choose, and your business objectives for the data.

For data at rest, a batch processing method would be most likely. In this case, you could spin up a bare-metal server during the time you need to analyze the data and shut it back down when you are done. With no need for “always on” infrastructure, this approach provides access to high-performance processing capabilities as needed.

For data in motion, you’d want to utilize a real-time processing method. In this case, latency becomes a key consideration because a lag in processing could result in a missed opportunity to improve business results. By eliminating the resource constraints of multi-tenancy, bare-metal cloud offers reduced latency and high performance levels, making it a good choice for processing large volumes of high-velocity data in real time.

Both types of data have their advantages, and can provide meaningful insights for your business. Determining the right processing method and infrastructure depends on the requirements for your specific use case and data strategy.

Learn more about the benefits of bare-metal cloud for different types of big data workloads.

Hasta la vista, CAPEX: Bare metal servers offer high performance, lower costs

What in the world is a bare-metal server? Sounds like something out of Terminator II, right? Well, good news for all of us: it’s not a movie prop or character, but rather a real-world solution to a new-age problem.

When Should You Consider Bare-Metal Instead of Other Options?

What if you want to avoid CAPEX (purchasing, maintaining, servicing, depreciating, etc.) and you need a dedicated computing resource for your project? Let’s say it’s a big data type of project, and your client (or boss) demands it’s in a secure environment to protect their valuable information. Not only that, you need really fast disk access and consistent IOPS. Well, those requirements can rule out public cloud infrastructure for some buyers.

Colocation for a temporary project doesn’t make sense, and clearly we would incur significant CAPEX and turnaround time, which precludes this option anyway. Long term custom hosting is a good fit, but in some situations it can be a little like killing a mosquito with a cannonball. Not exactly the best use of our money and a much longer-term commitment than we would need.

How Does the Bare-Metal Option Work?

Enter bare-metal servers. Bare-metal servers are dedicated physical servers provisioned on demand. A technician physically connects a server to the network at the provider’s multi-tenant data center (MTDC) for you. The “bare metal” name speaks to the “bare bones” nature of the server: by design, it comes to you as a clean slate. Most vendors let you select core count, memory, processor speed and OS, but that’s it. It’s yours to do with as you like. Bare-metal servers also scale out horizontally with ease, allowing you to ramp up for your bigger projects.

What Makes Bare-Metal Servers So Appealing?

The “gee whiz” to all of this is two-fold: first, some companies are able to provision a new bare metal server for you within a couple of hours. That’s more than enough time for you to stream Terminator II, laugh at the bad acting, grab a cup of coffee and sit back down at your desk. Boom, it’s ready for you. Second, it’s billed like a cloud service—meaning that for certain configs there are often no minimums past the first hour.
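To see what cloud-style billing means for a short project, here is a minimal sketch. It assumes usage is rounded up to whole hours with a one-hour minimum, in line with the description above; actual billing terms vary by provider and configuration, and the rate used below is a placeholder, not a real price.

```python
import math


def hourly_charge(hours_used: float, rate_per_hour: float,
                  minimum_hours: int = 1) -> float:
    """Cloud-style billing sketch (assumed terms): round usage up to whole
    hours and charge at least minimum_hours."""
    billable = max(minimum_hours, math.ceil(hours_used))
    return billable * rate_per_hour


# A 5.5-hour analytics run at a hypothetical 0.50 per hour bills 6 hours.
print(hourly_charge(5.5, 0.50))  # 3.0
```

Compared with buying and depreciating hardware, the cost of a temporary project under this model is simply the hours it actually runs.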

Why Do Bare-Metal Servers Stand Out?

So there you have it. A secure, dedicated environment allowing for higher IOPS, customized as you like, up in a couple of hours and gone again at the click of a button. Once you’ve used bare-metal servers, we’re guessing “you’ll be back”.

Industry news: cloud and colocation services offer data protection and security

Whether you’re trying to protect your data from a natural disaster, or concerned about meeting compliance or regulatory requirements for data storage, colocation and cloud services can provide peace of mind for your business. While there are many reasons to take advantage of colocation services, improved security and data protection can be some of the most important.

Below is a collection of articles to provide insight into the security capabilities of colocation and cloud services.

NIST releases cloud security documentation

The National Institute of Standards and Technology recently revealed a new standard document designed to accelerate cloud adoption in government settings. The guidelines are focused on helping public sector organizations establish cloud computing use models that are secure enough to meet stringent government requirements.

Colocation hosting offers value as a data protection strategy

Colocation providers offer remote storage, network security, firewalls and physical protections for your data. Access to your servers can be better controlled in a secure data center than in most office buildings. Colocation services can also help control access to your internal networks, making sure that only those who are authorized can access confidential data and company information.

Active Hurricane season predicted — colocation can be an asset

Colocation providers that offer complete infrastructure redundancy help minimize the risk of data loss in the event of a disaster. Data centers with reliable N+1 design and concurrent maintainability, such as Internap’s New York Metro data center, can protect against outages. When evaluating data center providers, make sure their facilities have the right infrastructure design and preventative maintenance in place so that your data and equipment are protected if disaster strikes. Since natural disasters are unpredictable, no data center can guarantee that your servers won’t go down, but data center facilities and colocation services can be a key part of your disaster recovery strategy.
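N+1 means a facility has enough units (generators, UPS modules or cooling units) to carry the full load, plus one spare, so any single unit can fail or be taken offline for maintenance without dropping the load. The arithmetic can be sketched as follows; the load and unit sizes are made-up numbers, and real designs also account for derating, growth and other factors this ignores.

```python
import math


def n_plus_one_units(load_kw: float, unit_capacity_kw: float) -> int:
    """Units needed for N+1: N units to carry the load, plus one spare."""
    n = math.ceil(load_kw / unit_capacity_kw)
    return n + 1


def survives_single_failure(load_kw: float, unit_capacity_kw: float,
                            units: int) -> bool:
    """True if the remaining units still cover the load after any one fails."""
    return (units - 1) * unit_capacity_kw >= load_kw


# Hypothetical example: 900 kW of load served by 250 kW units.
units = n_plus_one_units(900, 250)
print(units)                                   # 5
print(survives_single_failure(900, 250, units))  # True
print(survives_single_failure(900, 250, 4))      # False
```

With only four units (N), losing one leaves 750 kW for a 900 kW load; the fifth unit is what turns a single failure into a non-event.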

Keep security in mind when choosing a cloud provider

The public cloud created security concerns initially, because multiple organizations were sharing resources from the same cluster of servers. If one company experienced a breach within its virtual machine, it was possible that other organizations sharing the same resources could also be affected. However, with dedicated private cloud options and secure networks, cloud providers can successfully protect data and avert threats.

For up-to-date information on IT industry news and trends, check out Internap’s Industry News section.

CCP Games achieves high availability with Internap’s Flow Control Platform (FCP)

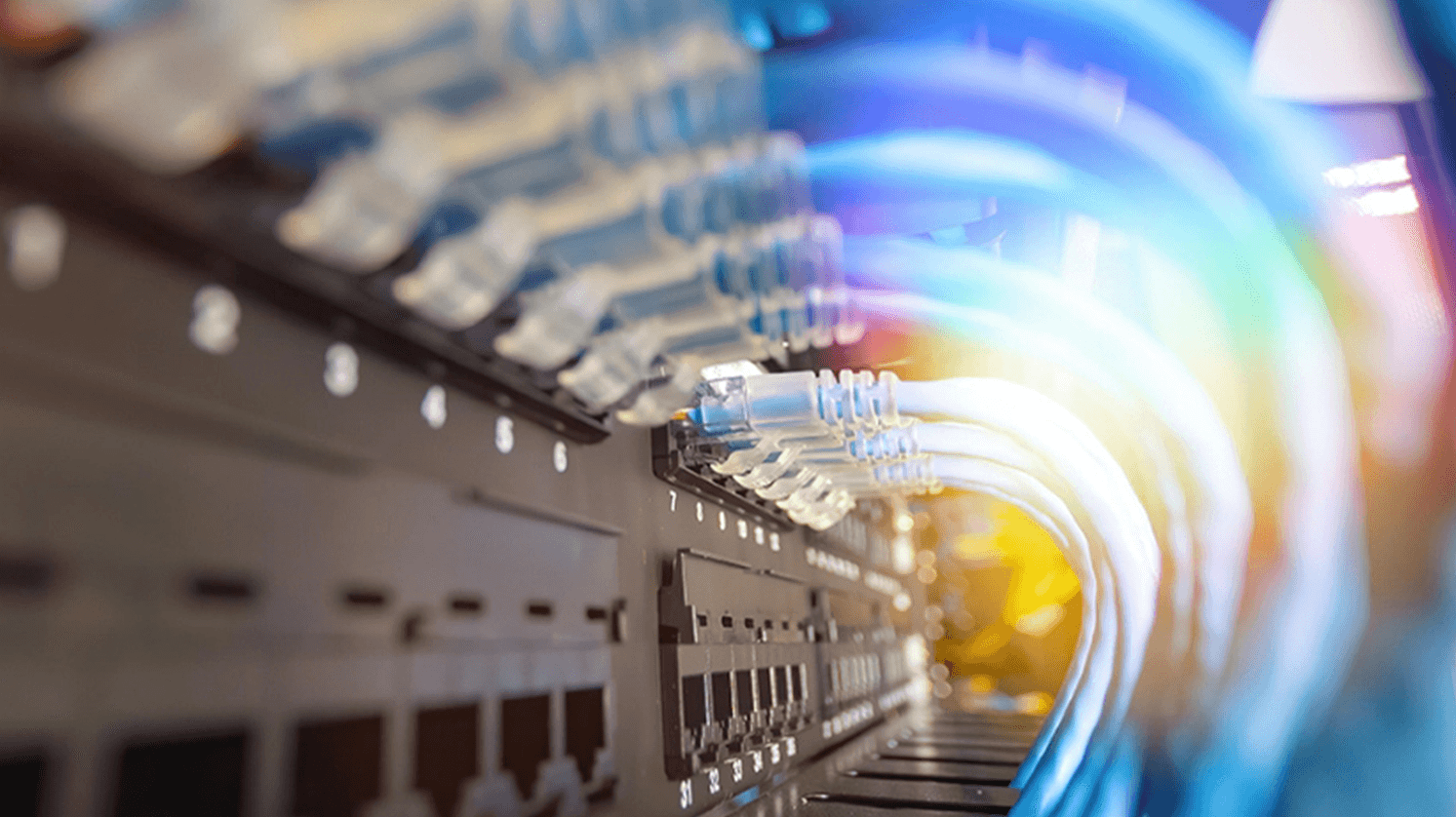

Gaming companies that develop massively multiplayer online games (MMOG) require highly reliable connectivity for a consistent gaming experience. To meet consumer expectations and avoid outages, game developers must have an IT Infrastructure in place that allows them to assess network availability and performance across multiple carriers. The ability to deliver your game across the optimal network path helps reduce the risk of disruptions and slowdowns that would otherwise result in lost gaming subscribers and lost revenue.

CCP Games is one of the leading companies in the field of massively multiplayer online gaming. But when subscribers began reporting issues with inconsistent performance and outages during game play, CCP Games needed a way to quickly identify and resolve network problems. With a large global subscriber base and game play that involves integrated voice and chat features, the end user experience relies heavily on the ability to automatically route traffic across the best network path.

With Internap’s Flow Control Platform™ (FCP), CCP Games can prevent network problems from affecting the experience of their online gamers. FCP analyzes IP network-bound traffic in real time, identifies which networks to send traffic over by measuring round-trip times and bandwidth usage, and optimizes all traffic for performance and high availability.

Real-time visibility – FCP provides visibility into performance and network availability across numerous network and carrier providers and then analyzes the data to automatically route traffic across the best-performing network paths.

Management and reporting – FCP delivers insightful metrics and reporting tools that can be used to understand the condition of providers’ networks at any time. This can significantly reduce the need for manual troubleshooting.

Automatic traffic routing – Instead of waiting up to several days for network engineers to address issues with poor performance, FCP automatically detects network problems before they affect the customer. The result is higher availability of CCP Games’ platform and increased customer satisfaction.
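The post doesn’t describe how FCP weighs its measurements, so the following is only a rough, hypothetical illustration of routing on measured round-trip times and bandwidth usage: among carriers that are reachable and not near saturation, pick the one with the lowest RTT. The `Carrier` class, the carrier names and the 90% utilization cutoff are all assumptions for the example.

```python
from dataclasses import dataclass


@dataclass
class Carrier:
    name: str
    rtt_ms: float         # measured round-trip time
    utilization: float    # fraction of link bandwidth in use, 0.0 to 1.0
    reachable: bool = True


def pick_path(carriers, max_utilization: float = 0.9) -> Carrier:
    """Choose the reachable, non-saturated carrier with the lowest RTT."""
    candidates = [c for c in carriers
                  if c.reachable and c.utilization < max_utilization]
    if not candidates:
        raise RuntimeError("no usable carrier")
    return min(candidates, key=lambda c: c.rtt_ms)


carriers = [
    Carrier("A", rtt_ms=42.0, utilization=0.30),
    Carrier("B", rtt_ms=35.0, utilization=0.95),  # fast but nearly full
    Carrier("C", rtt_ms=38.0, utilization=0.50),
    Carrier("D", rtt_ms=20.0, utilization=0.10, reachable=False),  # outage
]
print(pick_path(carriers).name)  # C
```

Re-running a selection like this on fresh measurements is what lets traffic move off a degraded carrier automatically instead of waiting for an engineer to notice.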

For online gaming companies and other industries that rely on optimal online performance, having the ability to automatically navigate around network trouble spots is critical. All carriers experience outages, and a solution like Internap’s FCP helps alleviate these problems without manual troubleshooting. Whether your goal is to maintain competitive advantage, improve customer satisfaction or grow your business, you need a consistent, reliable platform that end users can trust.

Cloud trends: Bare-metal cloud maximizes security and performance

As cloud solutions have evolved from physical servers and virtualized environments to SaaS (Software as a Service), PaaS (Platform as a Service) and even IaaS (Infrastructure as a Service), companies today must navigate through a sea of different cloud options. While cloud computing provides organizations with increased flexibility and cost-effective IT Infrastructure solutions, some workloads and applications demand higher performance than the virtualization layer allows. This has led to the next step in cloud evolution: bare metal cloud, which removes the hypervisor and the performance impact created by virtualization.

In a recent edition of Tech Talk with Craig Peterson, Internap’s Director of Cloud Services, Adam Weissmuller, discussed the benefits of bare metal cloud and how it can maximize server efficiency and improve performance.

Bare metal cloud gives physical servers the same flexibility and benefits as highly virtualized cloud environments. From another perspective, bare metal cloud brings cloud capabilities to dedicated physical servers: automation, self-service, massive scaling, hourly billing and the ability to pay only for what you use.

Security – With no shared tenancy, the entire physical server is yours. There is minimal risk that someone else will gain access to your server resources or your data, since bare metal servers are dedicated to a single customer. For many customers, multi-tenancy presents risks and in some cases is not compliant with industry or security regulations.

Performance – Bare metal servers will perform better simply because there are fewer things going on between the application and the hardware beneath it. Also, the single tenant environment allows for better performance because no one else is using it, as opposed to multi-tenant environments where others may be using some or all of the resources and slowing down your processes.

100 percent uptime – Managed Internet Route Optimizer™ (MIRO) allows Internap to optimize network connections across multiple providers. If there’s an issue somewhere out on the Internet, we can reroute traffic over a better path based on where it’s starting from and where it’s going. That allows us to provide 100 percent uptime and the lowest-latency connection at any given point for all of our customers’ traffic.

Whether you’re looking for a cloud environment or a bare metal server, choose the right platform based on your workload needs and application use case. With different types of cloud to choose from, it’s important to understand which one aligns best with your business goals.

Listen to the full Tech Talk with Craig Peterson interview to learn more about the benefits of bare metal cloud.

Los Angeles data center boasts high power density, performance and LEED certification

When evaluating colocation providers, finding data center space that meets performance and cost requirements can be challenging. Facilities built using green design techniques with sustainability in mind are able to offer enterprise IP, managed hosting and colocation services without sacrificing environmental responsibility and efficiency. Internap’s Los Angeles data center demonstrates a commitment to sustainability while meeting customer demand for industry-leading colocation services.

Internap’s LA facility was planned in accordance with green design techniques, and followed the Energy Star Target Finder guidelines to ensure mechanical and electrical efficiency. This resulted in a 47% reduction in energy use. One of the largest costs associated with operating a data center is power, and using sustainable energy techniques can help reduce operating costs while meeting customer needs for high performance and availability.

As a result of green design planning, Internap’s LA data center has been awarded LEED Gold certification by the U.S. Green Building Council (USGBC). The facility already holds a Green Globe® certification, which it achieved shortly after opening in September 2012.

Some of the services available in the LA facility include:

High density power of up to 18kW per rack, allowing businesses to “upgrade in place” without having to pay more for a larger colocation footprint.

Hybrid hosting allows businesses to seamlessly connect colocation, managed hosting and cloud environments through a secure Layer 2 VLAN (Virtual Local Area Network).

Concurrent maintainability and resiliency ensure maximum uptime for your environment. More than just N+1, our infrastructure system is designed for the concurrent maintainability of generators, UPS and cooling modules.

As part of its commitment to reducing the impact on the environment, the LA data center was built with pre- and post-consumer recycled materials. During renovation of the building, Internap reused more than 99% of exterior structural components. The facility was also designed to reduce energy consumption through the use of high-efficiency lighting, automated lighting controls, air-side economizer cooling controls and high-efficiency equipment.

Thanks to green design planning, the LA data center is able to provide high performance and high availability without sacrificing environmental responsibility. When evaluating data center providers, choose one that demonstrates a commitment to sustainability, energy efficiency and performance.

After moving to Singapore in 2010, I quickly realized that this is “Asia’s Silicon Valley”. But despite tremendous growth over the years, the little red dot still has a long way to go. Events like Walkabout Singapore help create interaction, idea sharing and awareness across the city’s tech scene. As a testament to our commitment to startups, Internap joined in the fun of Walkabout Singapore 2013.

So what is Walkabout? Well, the idea is simple. Once a year, all the tech companies in a given city open their doors and let anyone and everyone come visit. Students, geeks, the tech-savvy, the not-so-tech savvy and the just-plain curious – all are welcome to check out their favorite tech companies and see what happens behind the scenes.

This year, a total of 77 companies opened up their doors – up from 31 last year! Our Internap office had a variety of visitors. Some were just curious about what we do every day, while others wanted to learn more about the tech industry and Singapore’s startup scene. We even met a few guys that needed help solving real-world hosting problems!

Walkabout was started in New York City, and last year Internap helped bring it over to Singapore with our friends at Golden Gate Ventures. Unlike in the Big Apple, offices in Singapore can be quite far from each other, which makes it impossible for people to visit everyone in a single day. To help with that, we slightly modified Walkabout Singapore to include a rockin’ after party so all of the participants and guests can mingle after hours.

I think it’s safe to say that after this year’s turnout, Walkabout is going to be a staple in Singapore’s tech community for many years to come. The official blog has an excellent breakdown of growth from last year. Not too shabby!

So if you’re just getting started and have tons of hosting questions, come chat with us. We focus on cost-effective hosting solutions that make it easy for you to scale, even on a tight budget. From advanced IP delivery to colocation, we’ve got businesses of all sizes covered, with more than a dozen data centers around the world.

While Internap’s services are synonymous with enterprise-grade solutions, the fledgling entrepreneurial startups are just as important as our bigger, more established customers. Best of all, we always give transparent advice with your best interests in mind, regardless of your company’s size.