Month: March 2013

If you follow March Madness basketball, you’re aware of this year’s Cinderella run – Florida Gulf Coast University has exploded onto the NCAA tournament scene. As the first 15-seed ever to reach the Sweet 16, FGCU is breaking all the rules and setting new records for sports fans and media to talk about.

Their magical run is also setting new records for their website. After their victory over Georgetown, Internet traffic to FGCU’s online admissions page jumped 431 percent — then the site crashed. No one could have predicted FGCU’s success in the tournament, so preparing for a surge of website visits was probably not on anyone’s radar – and understandably so.

But predicting how well your web servers can handle an increase in Internet traffic is sometimes easier than predicting how far your team will go in the tournament.

When web servers get bombarded with a high volume of user requests, they can become overwhelmed and unresponsive. To prepare for these situations and improve website performance, a Content Delivery Network (CDN) can help. Using a CDN as part of your IT Infrastructure can reduce the risk of downtime and accelerate online performance in three ways:

Reduce latency – Each time a user clicks to view content on your website, the server has to process the request. With a large influx of site visitors, this can lead to slower response times and increased latency. A CDN can reduce the burden on the web server by storing cacheable content and distributing it directly to the end user.

Availability – Once your web server becomes overwhelmed, users can’t see any of your website content. But with a CDN, users can still access cacheable content in the event that the web server experiences an outage.

Scalability – The ability to scale means your website will be better equipped to handle traffic spikes, thanks to scalable storage capabilities. CDNs are also a security benefit because they offer protection from DDoS attacks that could leave servers vulnerable.
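To make the caching idea concrete, here is a minimal sketch of how an edge cache can shield an origin web server. It is a toy model, not how Internap's CDN or any particular CDN is implemented; the `EdgeCache` class, its `ttl_seconds` parameter and the `/admissions` path are all hypothetical names chosen for illustration.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class EdgeCache:
    """Toy edge cache (hypothetical): serves cached copies and falls back
    to a stale copy when the origin server fails."""

    def __init__(
        self,
        fetch_from_origin: Callable[[str], str],
        ttl_seconds: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[str, float]] = {}  # path -> (body, time stored)
        self.origin_requests = 0  # how many times we had to contact the origin

    def get(self, path: str) -> Optional[str]:
        now = self._clock()
        cached = self._store.get(path)
        if cached is not None and now - cached[1] < self._ttl:
            return cached[0]  # fresh copy: the origin is not contacted at all
        try:
            self.origin_requests += 1
            body = self._fetch(path)
        except Exception:
            # Origin is down or overloaded: serve the stale copy if we have one.
            return cached[0] if cached is not None else None
        self._store[path] = (body, now)
        return body


# Simulate a traffic spike: 1,000 visitors request the same page.
cache = EdgeCache(lambda path: f"<html>{path}</html>")
for _ in range(1000):
    cache.get("/admissions")
print(cache.origin_requests)
```

In this sketch, repeat requests inside the time-to-live window are answered from the cache, so the origin sees only a small fraction of the spike. If the origin throws an error, visitors still get the last cached copy, which is the availability benefit described above.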

As evidenced by my busted bracket, I was clearly not ready for FGCU’s success in this year’s tourney. While we can’t help you prepare for surprise Cinderellas, we can help prepare your website for better performance and a more reliable online experience.

Learn more about how Internap’s Content Delivery Network can help your website deliver the optimum online experience.

Explore HorizonIQ

Bare Metal

LEARN MORE

Stay Connected

Today’s online game developers face an increasingly global marketplace that is no longer restricted to just a few prominent regions. As consumers around the world embrace online video games, developers and publishers must deliver games to a variety of regions at launch. This presents a wide range of logistical and technological challenges which can be resolved with colocation services.

Considering the dynamics of a multi-region launch

A console generation or so ago, most games were released in Japan, nearby parts of Asia, the United States and, to a lesser extent, in the rest of North America. In the past decade, activity has increased in the United Kingdom, Europe and Australia, where video games long held a place in the subculture but are now prominent. People in South America and even parts of the Middle East and Africa have also embraced video games to some degree. Within the span of 10 or 20 years, the video game industry has progressed from a popular type of toy that represented a cultural niche in the developed world to a universal mainstream medium. As a result, game publishers must take a global approach to releasing new games.

When games were mostly a niche in much of the world, and released on discs or cartridges, games would often release at different times in various areas to spread out the burden. For example, a title may hit store shelves in Japan in May and become available in the United States at the end of June. With the rise of online video games and a global video game marketplace, these kinds of delays are not as feasible. It is possible to restrict game use based on the location of a user to stagger the release, which is still done in some cases, but video game enthusiasts increasingly expect a game to be launched simultaneously, or close to it, around the world.

Making a multi-region launch possible in an era where almost every game includes online content, regardless of whether it’s hosted on the web, hinges on having web servers in geographically diverse locations to support solid performance in a variety of markets. Establishing multiple global data centers can be a major cost burden. Managing the logistics of each data center system, localizing the services and distributing the workforce properly can also be difficult. Turning to a colocation provider can be the answer, especially since scalability is a critical factor when releasing a new game.

Using colocation to support global release processes

Colocation eases the burden of a global game release by allowing developers and publishers to host content in third-party data centers located around the world. As a result, they do not have to invest in the actual facility space and instead can simply purchase the infrastructure they need, configure it and let it work to support end-user functionality. Many colocation vendors also offer managed services as an option, alleviating the various maintenance challenges of handling a distributed data center architecture.

Having services available in a variety of locations is necessary to support a global game release because distance can contribute to latency. Furthermore, spreading out the workload can balance performance and ensure enough space for gamers trying to find a server that works for them. Supporting this kind of infrastructure without the help of a third-party service vendor can be an overwhelming challenge, but colocation offers a cost-effective solution to the global launch of online games.

To learn more, download our white paper, Five Considerations for Building Online Gaming Infrastructure.

When hearing the phrases “disaster recovery” or “disaster preparedness,” many people immediately think of how they can prevent or mitigate the downtime caused by an extreme weather event – such as a hurricane, earthquake, flood or tornado.

But the reality is that these phenomena are rare, and the much more common causes of downtime are events like power outages, IT failures, and human error. Gartner projects that “through 2015, 80% of outages impacting mission-critical services will be caused by people and process issues.”

Even major global brands are not immune to man-made disruptions, as seen recently in these well-publicized outages: Facebook went down for 2 hours last May and saw its stock price drop nearly 6% the next day; the entire GoDaddy hosting network failed last September, causing millions of sites and emails to stop working; and, of course, the infamous Netflix outage last Christmas Eve which set off a firestorm of angry tweets from subscribers.

While a disruption to your business may not receive quite the same media coverage as the above, the impact can still be significant and, in some cases, disastrous.

For example, ask yourself the following:

- If your website or payment processing system went down, how much revenue would you lose?

- How much revenue would be lost if your employees couldn’t work at their full capacity because a critical system was unavailable?

- What would be the impact if your company was unable to comply with a regulatory audit because of system unavailability or data loss?

- What if a system outage meant you couldn’t meet service-level agreements (SLAs) to your customers or partners?

- How would your company’s reputation be affected if your critical IT systems were unavailable for more than a few hours?

Once you start quantifying the potential financial impact of downtime, it should be pretty clear why having a disaster recovery plan is so important.
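If you want to put rough numbers on those questions, a back-of-the-envelope calculation is enough to start. The sketch below is only one way to frame it: the `DowntimeInputs` fields, the formula and the figures in the example are hypothetical placeholders, not benchmarks, and softer costs such as reputation or audit exposure are left out because they are hard to express per hour.

```python
from dataclasses import dataclass


@dataclass
class DowntimeInputs:
    """Hypothetical inputs for a rough downtime estimate."""
    revenue_per_hour: float        # sales normally processed per hour
    affected_employees: int        # staff who depend on the failed system
    loaded_hourly_wage: float      # average hourly cost per employee
    productivity_loss: float       # share of work lost while down, 0.0 to 1.0
    sla_penalty_per_hour: float = 0.0  # contractual penalties, if any


def estimate_downtime_cost(inputs: DowntimeInputs, hours: float) -> float:
    """Sum lost revenue, lost productivity and SLA penalties for an outage."""
    lost_revenue = inputs.revenue_per_hour * hours
    lost_productivity = (
        inputs.affected_employees
        * inputs.loaded_hourly_wage
        * inputs.productivity_loss
        * hours
    )
    sla_penalties = inputs.sla_penalty_per_hour * hours
    return lost_revenue + lost_productivity + sla_penalties


# Example with made-up figures: a two-hour outage.
example = DowntimeInputs(
    revenue_per_hour=10_000,
    affected_employees=50,
    loaded_hourly_wage=40,
    productivity_loss=0.5,
    sla_penalty_per_hour=500,
)
print(estimate_downtime_cost(example, hours=2))
```

Swapping in your own figures for each field gives a first estimate you can compare against the cost of a disaster recovery plan.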

For a deeper dive on the impact of downtime and guidance on disaster recovery delivery models, attend our April 25th Disaster Recovery webcast featuring guest presenter Rachel Dines, Senior Analyst, Forrester Research, Inc.

What does it take to deliver a successful live streaming event? As an Internap partner, Jolokia provides support for delivery of live and on-demand rich media. In today’s blog, Mark Pace, CTO of Jolokia, shares details about a client’s recent live streaming event and also discusses the challenges that providers must address to successfully deliver streaming content online.

In January 2013, three months of planning and testing ended with a brilliant reveal of a new automobile at the Detroit Auto Show. The live streaming event lasted a beautiful 11 minutes and was viewed globally over 600,000 times. While the stress involved matched the number of viewers, all our planning and testing paid off in the end and the event went as planned.

To deliver a successful online event to this many people, the video stream was shared via the manufacturer website, dealer websites from across the globe, and Facebook. We used Flash adaptive technologies and transmuxing to HLS, which provided the stream in a maximum of HD 1280×720 to both desktop and mobile devices. Using this adaptive technology along with the transmuxing allowed us to reach as many devices as possible under differing network conditions, including home cable connections and mobile viewers.

Finally, with our sights set on breaking half a million views, we knew we needed a solid global Content Delivery Network (CDN) with adaptive Flash delivery, transmuxing, stability and scale. Internap, our close partner for over a decade, filled these needs perfectly. And, in addition to supporting us through the purely technical requirements, they joined us for many of the planning calls and remained on the video streaming tech conference call before and during the event. This kind of support gave us and our auto manufacturing customer the peace of mind needed to survive the stress that goes into a production of this sort.

Challenges to successful live events

Let’s face it – mobile and live streaming capabilities only work consistently on one vendor’s mobile devices right now. Treading the waters that are now… read this… worse than they were when video streaming first became available in the ‘90s is unbelievable. Waiting for MPEG-DASH to emerge as the standard for online mobile delivery is equally disturbing, when all the technologies to get live streaming working to almost all mobile devices are available now. Not that any of the industry giants will read this blog and take this to heart, but seriously, H.264 and HLS work now, and you can implement that while waiting for our greater needs and dreams to be serviced by DASH. Need I mention that almost all devices have H.264 hardware decoders in them specifically for this?

Lessons learned

Because of the inconsistent live streaming capabilities on mobile devices right now, we would want to dive much further into our device detection before our next event. This is extremely painful because there are hundreds of devices, each with differing versions of software. Putting together the matrix for these devices will take months, but considering the viewership levels, it will be worth it. Having reliable fallback videos that continue the video experience and warn users to wait for the on-demand content is a must on the next go.

Moving targets: Social media obstacles

Social sites are rapidly evolving beasts that show no chance of slowing their furious pace. Because social networking sites make regular updates and changes, the window of time to test out the latest technologies on these sites is short. This will continue to be a pain point going forward, and having a well-developed test plan that can be modified easily and executed on short order is currently the only stop gap for this challenge.

Delivering a successful live streaming event requires careful planning, and the flexibility to adapt your plan to emerging technologies. Internap’s global CDN allows us to stream transmuxed VOD and deliver high-quality online events with low latency, which in this case resulted in more than 600,000 views. The support of a trusted partner also helps address the challenges regarding mobile capabilities and social media site changes.

MySQL bills itself as “The World’s Most Popular Open Source Database,” and with good reason. Although an open source database solution might not be the choice for every enterprise, MySQL does provide high performance, scalability and flexibility for only a fraction of the cost of other database server solutions. There are three major ways to leverage the power of MySQL in central business functions: developing online applications, data warehousing, and enterprise-level custom applications.

Online Applications: Powering Web 2.0

As part of the LAMP open source stack (Linux, Apache, MySQL, & PHP/Perl/Python), MySQL has been foundational in developing many of the world’s fastest-growing social applications. By facilitating performance, scalability, and reliability, MySQL and the LAMP stack enable transactional and interactive web-based applications to scale-out and meet the needs of growing users and data. Some of the biggest names on the net are harnessing the power of MySQL for their Web 2.0 applications:

Online worlds – Neopets, Second Life

Web syndication & feeds – Digg, Feedburner, Google Reader

Blogs – Blogger, WordPress, LiveJournal

Social Networking – MySpace, Friendster

Wikis – Wikipedia

Customized search engines – Craigslist, Trulia, Technorati

File sharing – YouTube, Flickr, Snapfish

Data Warehouse: Keeping Information Safe

Of course, one of the primary functions of any database is to keep your data stored, organized, and safe for easy access. MySQL offers an array of storage engines, both developed in-house and externally, which allow you to create, retrieve, update, and delete your data as needed (CRUD, the four basic functions of persistent storage). MyISAM is a common internal storage engine from MySQL developed specifically for data warehousing applications, although it is non-transactional. InnoDB is a popular externally developed storage engine, which supports both ACID-compliant transactions and foreign keys. Other storage engines from MySQL, such as Archive, Memory, CSV and Merge, are designed for specific data warehousing functions. Choosing the best storage engine for your given database application is essential to successful data warehousing.
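As a rough illustration of that choice, the sketch below picks between the two engines using only the distinction drawn above (transactions and foreign keys point to InnoDB) and builds a `CREATE TABLE` statement with MySQL's `ENGINE=` table option. The helper names `choose_engine` and `create_table_sql` and the `orders` table are hypothetical, and real engine selection involves more factors than these two flags.

```python
from typing import Dict


def choose_engine(needs_transactions: bool, needs_foreign_keys: bool) -> str:
    """Pick an engine using only the two criteria described in the text."""
    if needs_transactions or needs_foreign_keys:
        return "InnoDB"   # ACID-compliant transactions and foreign keys
    return "MyISAM"       # non-transactional


def create_table_sql(table: str, columns: Dict[str, str], engine: str) -> str:
    """Build a CREATE TABLE statement that names the storage engine.

    Only for trusted, hard-coded identifiers: this does no escaping.
    """
    cols = ", ".join(f"`{name}` {sql_type}" for name, sql_type in columns.items())
    return f"CREATE TABLE `{table}` ({cols}) ENGINE={engine};"


# Hypothetical example: an orders table that needs transactions.
engine = choose_engine(needs_transactions=True, needs_foreign_keys=True)
print(create_table_sql("orders", {"id": "INT PRIMARY KEY", "total": "DECIMAL(10,2)"}, engine))
```

The point of the sketch is simply that the engine is chosen per table, so an application can mix engines depending on what each table is for.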

Enterprise-level Custom Applications

MySQL is also a remarkably flexible database for a variety of custom enterprise-level applications. It has been effectively utilized in the IT industry and others for a variety of client, employee, and operational functions, even those that have traditionally been handled by proprietary database software. MySQL Enterprise provides many of the features and support necessary to facilitate transactional and customer relationship management applications such as:

Call centers

Helpdesk applications (including support ticketing systems)

Financial applications (including automating loan applications and asset management)

Research, documentation, and testing

Human resources applications (including absence management and vacation tracking applications)

Unfortunately, databases are all too often a source of headaches, with lost and corrupted data and inefficient functionality. But when effectively utilized, your database can be foundational in the execution of your business’s most central functions, from HR to customer relationship management to value-driving operations.

If you are looking for complex hosting solutions, including hosting your own MySQL database, look no further than INAP. With hundreds of complex MySQL systems running on our network we understand what is needed to make your database run fast, smooth, and secure. For MySQL hosting, go with INAP.

Updated: January 2019

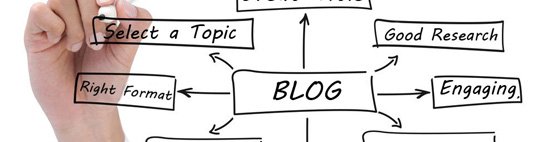

A while back my boss asked me to lead the creation of a blog program for our company. Having only written a few posts in my time, I immediately realized I had a lot to learn. After all, if I was going to be advising other people at my company on how to write great blog posts, then I’d better damn sure know what I’m talking about. So I went out and started my research. I read countless articles and pulled only what I considered to be the most essential best practices for success. I wound up with seven, and the information I’m sharing with you is the same information I sent out to our entire company. I call it “The Blog Behind the Blog.” I hope it helps.

1. Choosing the Proper Length

When writing a blog post, you generally don’t need to make it too long. In fact, 300-500 words is often plenty; sometimes even less if the content is good. When constructing your paragraphs, consider keeping them short (in the 3-4 sentence range), utilizing bullet points, and using simple language that your audience can understand. In the age of Twitter, and with so many people reading information on their mobile devices, small chunks of content are what most people are accustomed to. That’s not to say there’s not a place for longer blog posts too, but in either case you need to find a way for people to easily digest it.

2. Selecting the Right Format

When formatting a blog post, it’s often best to utilize subheadings to split it up in a way that people can easily process. In fact, many readers are likely to only skim your blog before deciding whether they want to actually read it. So be certain to use bold headers to help indicate whether your article contains content that’s of interest to them.

Some popular but effective ways to do this are creating a “how to” guide or a tutorial. Popular formats include Top 10 tips. The fewer points you make, the more content you should probably use to support each point. Be sure to use good sub headings that summarize these points and add a paragraph or two to support each one. Another option might be to make a longer list, e.g. “50 ways to….” This format will only require minimal content to support each point; perhaps just a sentence or two.

What if you have a lot to say? In that case, a multi-post series might be the way to go. If you elect to write multiple posts, be sure to link them together for the ease of the reader. Lastly, as you’re writing, ask yourself, “Is what I’m writing meant to be a blog post?” Perhaps instead you are writing content that the reader should be downloading in addition to reading your post, to get more details on the subject – like a white paper or case study.

3. Creating a Good Title

The title of a blog post can have significant impact on whether or not people want to read your post. Good titles will set the reader’s expectations from the start. A common best practice is to utilize a title that suggests that your post will provide some kind of value to the reader. You can convey this by utilizing a title that promises to teach or educate the reader to become more knowledgeable about a subject they are already interested in. A good blog title will also sometimes let the reader know that it contains something useful or that they shouldn’t ignore. For example, let’s say your audience was interested in running a marathon. Some examples of good titles might be:

- 6 Lessons Learned from Running the Marathon.

- 25 Ways to Become Better at Running Long Distances.

- How to Become an Expert at Long Distance Running.

- 10 Things You Can’t Ignore if You Want to Finish a Marathon.

4. Engaging your Audience

The start of your blog post is where you’ll want to set the tone early by making a strong impression in that first paragraph. Set the reader’s expectations so that they know what they are getting into, and don’t be afraid to have an opinion. In fact, adding personal stories can help earn you credibility with the audience and truly engage them. Short stories are often very effective if you can tie them back to the point you’re trying to make. You can also provide real-life or theoretical examples for the reader to consider. Also consider how you can best enhance and support your post visually: pictures, diagrams, charts, graphics, videos, quotes, statistics, etc. No matter what visuals you choose, try to make certain they’re both relevant and useful, or at the very least funny.

5. Selecting a Topic

So how do you come up with a topic? Well a great place to start is by asking questions. What questions need to be answered? What are people asking about? Odds are if one person wants to know it, there are likely others. A question and answer format may even be an appropriate way to present the content you have. If you’re the content expert, you could position yourself as the one answering the questions; perhaps via an interview.

6. Creating Calls to Action

An important aspect to try and incorporate into any post is the call to action. You want your post to try and elicit a response from your audience if you can. Getting the audience to answer a question or comment and discuss your post is a great way to engage them. Starting dialogues is what can help lead to sales and further boost your credibility as a thought leader on the subject.

7. Conducting Good Research

One thing that many great blog posts have in common is good research. Don’t be afraid to go do additional research for your post. Statistics can be a great source of interest for many readers. Creating and administering a survey to analyze might result in some interesting findings for you to share with your readers. There are always resources available to help you out, but be sure to check out what other blogs in your industry are writing for inspiration as well. Lastly, be sure to enroll other people in your writing process. Talking out your ideas and sharing drafts with others is a great way to build and shape your blog post into something better.

When you first start writing blog posts, it can be intimidating. But remember, you’re the content expert and you have knowledge to share, and if you don’t have that knowledge, then go out and find it and then share it. Finally, realize that writing good blog posts can take time, but in the end good content is worth it. One good post will trump a dozen posts of lesser, mediocre value and go a long way toward increasing your credibility as an author.

Updated: January 2019

At the 2013 Georgia Technology Summit yesterday, Internap was honored as one of the Top 40 Innovative Technology Companies in Georgia. The awards, presented by the Technology Association of Georgia (TAG), recognize “an elite group of innovators who represent the very best of Georgia’s Technology community.”

The hundreds of technology leaders in attendance at the event also represent something else – they reflect larger trends taking place in the world of business today. A quick glance at the full list of winners shows the increasing demand for cloud, mobile and social technologies. Healthcare, finance, risk management, green energy and supply chain management are also well-represented on this list.

Emerging Trends

If these are the areas where innovation is taking place, what conclusions can we draw? The list shows an increasing demand for cloud services, mobile and social capabilities. This suggests that businesses across a variety of verticals are looking to technology for more cost-effective and efficient ways to work, and Georgia companies are rising to the challenge.

At Internap, our cloud hosting and colocation services offer ways to decrease operating costs by offering efficient alternatives to traditional IT Infrastructure. Our Atlanta data center was recently awarded Green Globes certification, and our solutions allow organizations to reduce capital expenses while increasing business agility.

The impressive list of Top 40 Innovative Technology Companies also suggests that Georgia is well-positioned for future success. Internap is proud to be honored as part of this list.

March Madness: How CDNs help you watch college basketball at work

Dear Bosses of College Basketball Fans,

An intense fever called March Madness is sweeping through workplaces across the country. Do not be alarmed if your employees gather around their computer screens to watch streaming media of NCAA tournament basketball games. This may result in random cheering, fist-pumping, high-fiving and possibly crying tears of joy or defeat.

This week, college basketball fans will bring their school pride to their workstations and watch their favorite teams compete to become the next National Champion. This is why Bob from IT will come to work wearing a curly white wig and his face painted blue. Or why Susan from marketing will arrive in her college basketball uniform from 15 years ago. But did you know all this madness is made possible through the magic of Content Delivery Networks (CDNs)?

You: But what is a CDN and how does it work?

Me: I’m glad you asked. Let me explain.

A CDN is an effective way to distribute large files and stream live or on-demand media to end-users anywhere in the world. With scalable storage and the ability to handle traffic spikes, a CDN provides a high-quality viewing experience even when large numbers of people are consuming live streaming events at the same time. Basically, this means your employees can stream live basketball games online all day.

Here at Internap, our global CDN helps reduce the load on origin infrastructure to provide fast and reliable distribution of media assets, resulting in low latency and high performance.

So, when you’re patrolling your office floor later this week and you happen to see a college basketball game on someone’s screen instead of that finance spreadsheet you need by 5pm, no worries. Thanks to CDNs, it probably wasn’t going to get done anyway.

Managed hosting helps businesses handle mobile device challenges

Mobile device ecosystems are contributing to major changes in how businesses get the job done. If you look around your office, you’ll probably notice that most people are still at their desk like always, but many of them may be using their personal mobile devices on the side. Smartphones will often be pulled out at meetings to clarify data. The rise of “bring your own device” is changing the enterprise IT landscape, and companies have to adjust accordingly.

Considering the implications of BYOD

When employees are allowed to use their personal mobile devices in the workplace, almost every facet of IT has to change. Instead of handling technology from a perspective where it is tightly controlled by IT and given to users based on their needs, workers are bringing the technological tools they want into the workplace and expecting IT to adjust to them.

This creates an operational climate that is, in many ways, beyond what most IT departments are equipped to handle. Mobile devices demand flexible, integrated, scalable and almost universally accessible application access. Unless you have a private cloud that is orchestrated to support scalable mobile use, you probably need some third-party help.

Taking advantage of managed hosting

Managed hosting providers can take on your mobile application architecture and ensure end-users have access to what they need at any time. To a great extent, this means the managed hosting solution is used to establish a solid foundation for mobility and the connectivity infrastructure needed to ensure that critical applications and data are delivered to users effectively. Managed hosting offers improved performance, flexibility and support, along with on-demand scalable storage and managed data protection.

If you want to control how users access apps and data, you can deploy governance systems within your managed hosting plan to get more control over what employees can access. While managed hosting is traditionally used for web hosting, you can use the technology to support web-based apps and services and deliver them to end users efficiently.

Is your business ready to take on the challenge of mobile devices in the workplace? Learn more about the benefits of incorporating managed hosting into your organization’s mobile strategy.

Many IT and operations professionals focus on establishing processes and procedures to get systems back up and running after a disruption, but it’s also important to have the right IT Infrastructure in place before disaster strikes. As part of your 2013 IT strategic planning, disaster recovery and prevention capabilities should always be one of the factors you evaluate.

Fortunately, it’s easy to mitigate disruptions when you have the right foundation for your infrastructure. Data centers, colocation services and cloud hosting are designed with business continuity in mind, plus you get the added benefit of improving internet performance. Let’s look at three elements of disaster-resistant design and infrastructure that can help you prepare for, and sometimes prevent, disruptions.

Redundant power circuits

Internap colocation facilities help mitigate the likelihood of power outages by providing a second circuit path. Having redundancy options and backup power systems in place can help prevent a disruption before it begins. When evaluating providers, make sure their redundant network devices don’t connect to the same patch panel, Uninterruptible Power Supply (UPS) system, breaker or other infrastructure.
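One simple way to run that check is to write down which physical components each power or network path depends on and look for overlaps. The sketch below assumes you can get that inventory from a provider; the `shared_dependencies` helper and the component labels such as `UPS-A` are hypothetical.

```python
from typing import Dict, Set


def shared_dependencies(path_a: Dict[str, str], path_b: Dict[str, str]) -> Set[str]:
    """Return the component types (e.g. 'ups') where both paths rely on the
    same physical unit, which would make it a single point of failure."""
    return {kind for kind, unit in path_a.items() if path_b.get(kind) == unit}


# Hypothetical inventory for a primary and a secondary path.
primary = {"patch_panel": "PP-1", "ups": "UPS-A", "breaker": "BR-7"}
secondary = {"patch_panel": "PP-2", "ups": "UPS-A", "breaker": "BR-9"}

overlap = shared_dependencies(primary, secondary)
if overlap:
    print("Not fully redundant, shared components:", sorted(overlap))
else:
    print("No shared components found")
```

In this made-up inventory both paths hang off the same UPS, so a single UPS failure would take out the "redundant" path as well.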

Routing Control

Whether you experience a major outage due to a natural disaster, or are simply having internet performance problems in your local area, our Managed Internet Route Optimizer™ (MIRO) will dynamically seek out the fastest route for optimal internet speed. This results in minimal impact on your business operations, even if your main internet provider goes down.

State-of-the-art fire prevention

Our data centers are equipped with the most advanced fire detection and control technology. We also have strict rules in place to prevent dangerous situations such as power surges from becoming a larger problem.

Don’t overlook the importance of disaster recovery during your 2013 IT strategic planning – put the right preventative measures in place before something unexpected happens. If you’re making decisions on new technologies or services that affect your infrastructure, be sure to evaluate their disaster recovery capabilities.

Building the right IT foundation can help you prevent disruptions and avoid lost revenue, waning customer confidence and costly maintenance. The ability to recover your data and maintain business continuity after a disaster is critical to the success of your business.

At Internap, we go to great lengths to mitigate disasters. To learn more about maintaining business continuity, check out our ebook, Data Center Disaster Preparedness: Six Assurances You Should Look for in a Data Center Provider.